Jason Wei has also been poached by Zuckerberg: the proponent of Chain-of-Thought and a founding contributor to the o1 series! This time, they really struck OpenAI's artery

Jason Wei, the proponent of Chain-of-Thought and a key figure behind the o1 series models, has reportedly been recruited by Zuckerberg to join Meta. The news was first reported by Kylie of Wired magazine and has been confirmed by sources. Jason Wei's Slack account has been deactivated, as has that of fellow o1 core member Hyung Won Chung. Jason Wei previously worked at Google and then OpenAI and has published several influential papers on large models; his move to Meta is seen as a significant blow to OpenAI.

This time, they have truly struck OpenAI's artery.

Jason Wei, the proponent of Chain-of-Thought and a key figure behind the o1 series models, is reported to have been recruited by Zuckerberg and is about to join Meta.

The news was first reported by Kylie of Wired magazine and has been confirmed by sources.

Kylie also reported that Jason Wei's Slack account (OpenAI's internal messaging tool) has been deactivated, as has that of another key o1 figure, Hyung Won Chung.

So is the o1 team about to reassemble as a constellation of stars at Meta?

Before this, facing Meta's recruiting offensive, OpenAI CEO Sam Altman was doing some internal spin, claiming that the truly top talent had stayed and that those who left were "lower on the list" and "profit-driven."

But now even the renowned Jason Wei is reportedly switching to Meta.

This is no longer a question of whether the departing talent is top-tier; it is a strike at OpenAI's artery.

Proponent of Chain-of-Thought Recruited by Zuckerberg

Jason Wei, an ethnically Chinese scientist and the proponent of "Chain-of-Thought," is a key figure behind o1.

He studied computer science as an undergraduate at Dartmouth College in the United States; his thesis advisor, Professor Lorenzo Torresani, currently serves as a scientist at FAIR, Meta's AI research lab.

After graduating in 2020, Jason Wei joined Google.

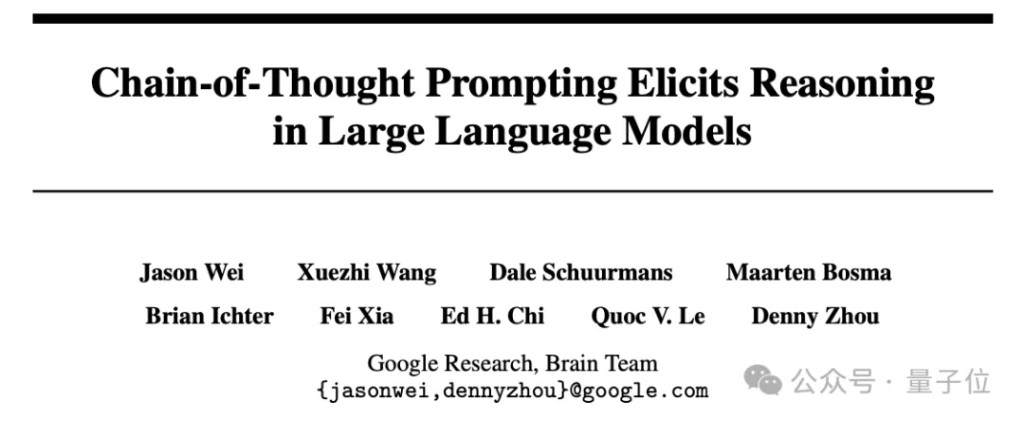

In 2022, while still at Google Brain, Jason Wei published the seminal work on Chain-of-Thought titled "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models" as the first author.

During his time at Google, Jason Wei helped advance key large-model techniques such as Chain-of-Thought and instruction tuning. He also published research on the emergent abilities of large models together with PaLM author Yi Tay and Google's chief scientist, Jeff Dean.

In 2023, Jason Wei joined OpenAI and later became a key figure behind important products like o1 and Deep Research.

At last year's o1 "meet and greet," Jason Wei sat front and center, next to Shengjia Zhao, another key figure behind o1 who has now also been "recruited" by Zuckerberg.

Hyung Won Chung, the other figure rumored to be leaving, is also a key member of the o1 team and led the training work for Codex mini.

Hyung Won Chung is from South Korea and obtained his Ph.D. from the MIT Computer Science and Artificial Intelligence Laboratory in 2019.

Before joining OpenAI, Chung also worked at Google Brain, where he focused on solving the bottlenecks encountered when scaling large models and built a large-scale training system based on JAX.

Both worked at Google, both are core contributors to OpenAI's o1 and Deep Research projects, and the two are close: they have given joint lectures at Stanford University sharing their research on large models.

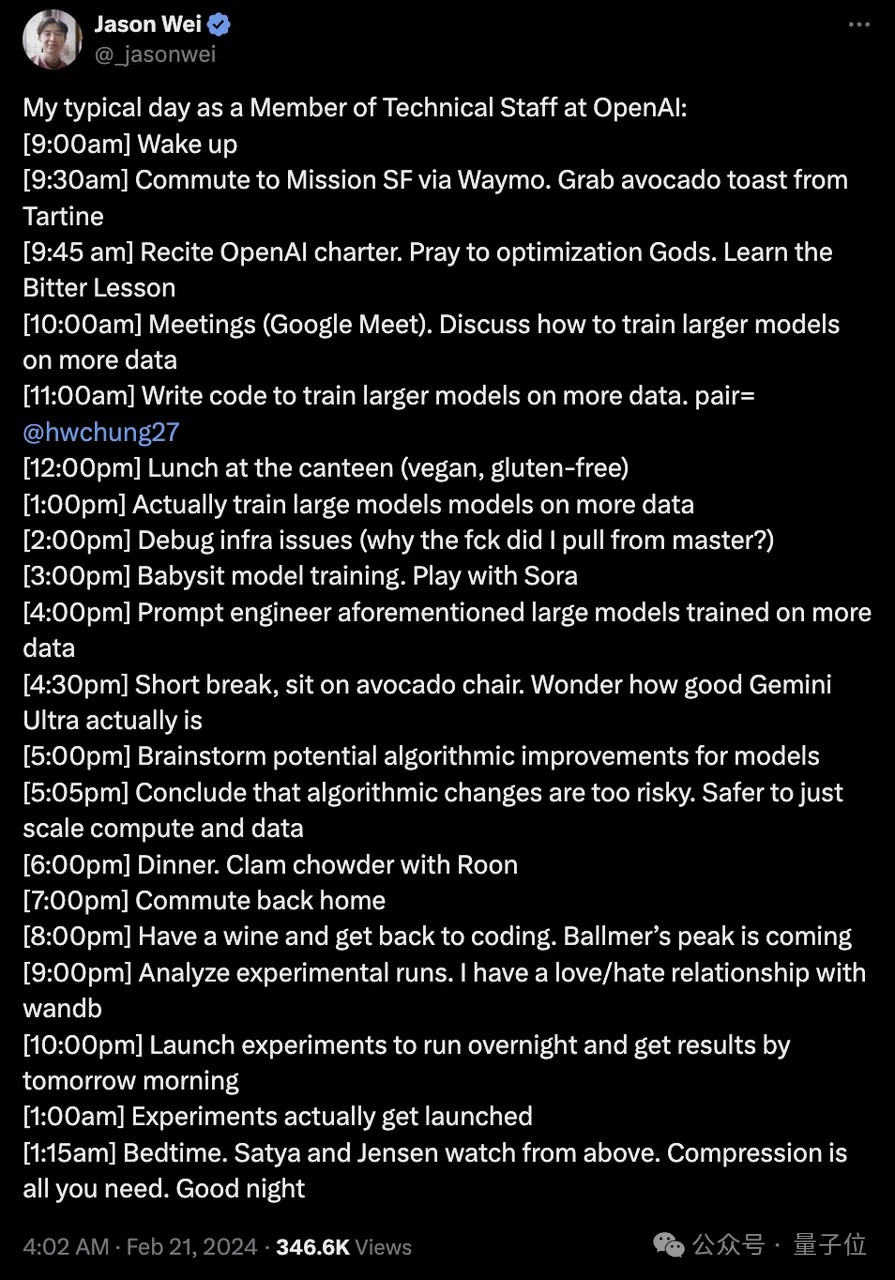

Jason even mentioned Chung by name in his "996 diary" (996 meaning working 9 a.m. to 9 p.m., six days a week).

Jason has also written a lengthy article publicly expressing his gratitude to Chung.

All of this makes it look more likely that Chung is being poached as well.

Why is Zuckerberg so good at poaching?

The fact that key figures from OpenAI are continuously "defecting" shows that Zuckerberg has indeed put in the effort and given them the respect they deserve.

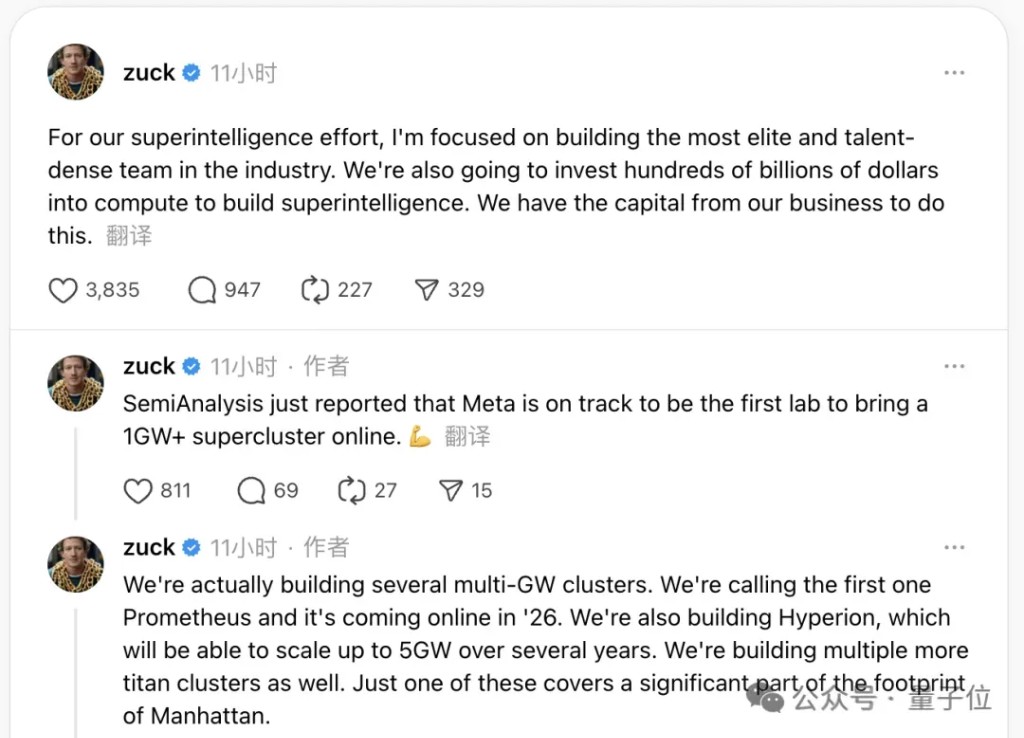

Initially, Altman said that those who were poached were "only after profit," but OpenAI's countermeasures, including time off, salary increases, and stock incentives, have failed to retain these people.

Amid widespread speculation, Mark Zuckerberg personally responded: they joined Meta not for the money, but to "build god," that is, the ultimate AGI.

Meta Superintelligence Labs offers top AI talent extraordinary support: researchers report directly to Zuckerberg and get unlimited access to the most powerful GPUs!

To this end, Meta is ramping up the construction of data centers, aiming to become the first lab to launch a 1GW+ supercomputing cluster.

Of course, besides Zuckerberg's external offensive, there are also push factors inside OpenAI itself. A widely shared blog post reflecting on OpenAI's internal situation offers some clues.

The post's author is Calvin French-Owen, an engineer who worked on Codex and announced his departure from OpenAI three weeks ago.

Calvin mentioned in the blog that his reason for leaving was to return to the role of a startup founder (Calvin was a co-founder of the customer data startup Segment).

More importantly, he reflected on how OpenAI works: he praised its pragmatic, low-formality working style, but also detailed many shortcomings within the company, from personnel to technology.

The first is the company's astonishingly rapid growth and the chaos that came with it.

During his more than a year at OpenAI, Calvin witnessed the company's explosive expansion from 1,000 to 3,000 employees.

Behind this boom, internal communication, reporting structures, product release processes, personnel management and organization, and hiring processes were all under strain.

At the same time, internal "horse racing" (multiple teams competing on the same problem) was common, and the work was intense and high-pressure.

For example, the Codex team, where Calvin worked, built and launched a product from scratch in just seven weeks; 996 hours were the norm, with almost "no sleep."

Calvin described this sprint for Codex as the "hardest he has worked in nearly a decade," often working late into the night, waking up early in the morning, and spending most weekends working.

On the technical side, OpenAI's codebase has also turned into a "sh*t mountain" of messy code.

Calvin explained that the code quality in OpenAI's core codebase is uneven, and there is no large-scale enforcement of style guidelines.

It contains libraries built to serve billions of users by seasoned former Google engineers, alongside throwaway Jupyter notebooks written by newly minted PhDs. As a result, things frequently break or take far too long to run.

In Calvin's words, although OpenAI keeps growing, it "has not yet realized that it is a giant company," and its way of working has not yet completed the transition from startup to large company.

Calvin believes the situation closely resembles early Meta. After all, until recently, talent flowed between Meta and OpenAI in almost exactly the opposite direction from today.

So this heartfelt "reflection" also points to something at another level:

It's not that Zuckerberg is too aggressive; rather, Altman's abilities really are limited, and Ilya carried him to a height he should never have reached.
