A first in history: an "AI vs. humans" work capability assessment, and the results do not look good for humans

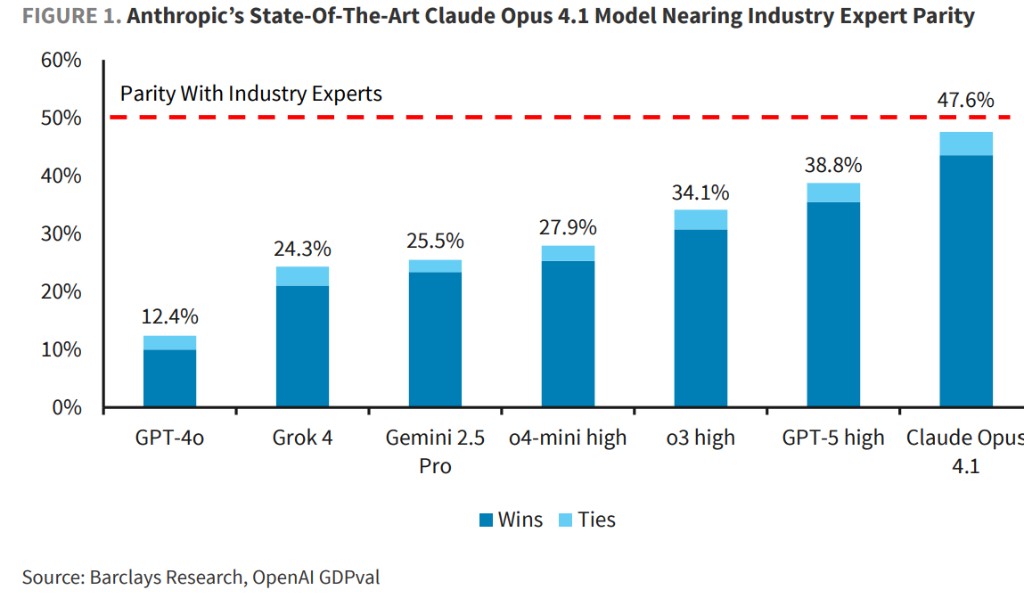

Barclays' analysis shows that top AI models are nearing the level of human experts, with Claude Opus 4.1 leading at a 47.6% "win or tie" rate. AI has already surpassed humans in areas such as retail trade and performs strongly in professions such as software development. More striking still, model performance has more than tripled in 15 months, following a roughly linear trend. Barclays predicts that within the next 12-24 months, AI will surpass human experts in most work tasks.

OpenAI's newly released GDPval-v0 evaluation tool quantifies, for the first time, the ability of AI to perform economically valuable work tasks, and it shows AI rapidly approaching the level of human professionals. Barclays stated that the most advanced AI models are now comparable to human experts in many occupational tasks, and that the pace of improvement is accelerating.

According to a previous article by Jianwen, OpenAI recently launched a new assessment tool called GDPval-v0. It covers approximately 1,300 specific work tasks across 44 occupations in nine major business sectors that account for a significant portion of U.S. GDP, with real work deliverables ranging from legal documents to engineering blueprints to nursing care plans.

The results show that today's top AI models are comparable to human professionals on many occupational tasks. According to Hard AI on October 5, Barclays stated in its latest research report that Anthropic's Claude Opus 4.1 achieved a "win or tie" rate of 47.6% against human experts, ranking first.

Barclays analysts believe that the "win rate" of AI models has more than tripled over the past 15 months, growing roughly linearly, and they expect AI to surpass humans in most work-related tasks within the next 12-24 months. This result provides critical data support for assessing the return on investment in AI.

Innovative Breakthrough in Evaluation Standards: Simulating Real Work Complexity

According to the Barclays research report, the core innovation of the GDPval benchmark lies in its authenticity and complexity.

The evaluation was designed by senior professionals with an average of more than 14 years of industry experience, and it covers 1,320 professional tasks across industries such as technology services, finance and insurance, healthcare, information technology, and manufacturing.

Unlike traditional benchmarks, GDPval tasks are not simple text Q&A. They are complex scenarios that come with reference documents and context, and they require the AI to produce varied deliverables, including documents, slides, charts, and spreadsheets. Barclays pointed out that this design is closer to the complexity of real work environments.

The evaluation was conducted as a blind test: industry experts ranked the work outputs produced by AI and by humans without knowing which was which, with tasks assessed along dimensions such as difficulty, representativeness, completion time, and overall quality.
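To make the headline metric concrete, here is a minimal sketch of how a "win or tie" rate could be computed from blind pairwise judgments. It assumes each comparison ends with exactly one of three labels; the label names (`"ai"`, `"human"`, `"tie"`) and the sample data are hypothetical and are not taken from GDPval or the Barclays report, whose actual grading pipeline may differ.

```python
from collections import Counter


def win_or_tie_rate(judgments):
    """Return the share of comparisons where the AI deliverable won or tied.

    `judgments` is an iterable of labels, one per blind comparison:
    "ai" (AI output preferred), "human" (human output preferred), or "tie".
    These labels are an assumption made for illustration.
    """
    counts = Counter(judgments)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one judgment is required")
    return (counts["ai"] + counts["tie"]) / total


# Hypothetical judgments, NOT real GDPval data.
sample = ["ai", "human", "tie", "human", "ai", "human", "human", "tie"]
print(f"win-or-tie rate: {win_or_tie_rate(sample):.1%}")
```

Read this way, a rate near 50% means expert graders preferred the AI deliverable, or could not separate the two, in about half of the comparisons.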

AI Performance Approaching Human Expert Level

Barclays' analysis shows that the most advanced AI models have approached or reached human expert levels in multiple fields. Claude Opus 4.1 leads with a "win or tie" rate of 47.6%, followed by GPT-5-high at 38.8% and o3-high at 34.1%.

By industry, AI outperforms human experts in retail trade (56%), wholesale trade (53%), and government (52%), but is relatively weak in the information technology sector (39%).

By occupation, AI performs best on tasks for counter and rental clerks (80%), shipping, receiving, and inventory clerks (76%), and software developers (70%), and worst on tasks for industrial engineers (17%) and film and video editors (17%).

Different models show distinct strengths: Claude Opus 4.1 excels in aesthetics (formatting and layout), while GPT-5 is the most accurate at following instructions and carrying out precise calculations.

Remarkable Speed of Capability Improvement

The Barclays report places particular emphasis on how quickly AI capabilities are improving.

According to the report, OpenAI models have more than tripled their performance on the GDPval test within 15 months, and this linear growth trend suggests that AI is likely to surpass human experts in the near term.
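The arithmetic behind a linear projection like this can be sketched as follows. This is an illustration only and not Barclays' calculation: it assumes the starting score was exactly one third of GPT-5-high's 38.8% (the article says only "more than three times"), that the trend stays linear, and the target thresholds are arbitrary illustrative values.

```python
def months_to_reach(target, current, start, elapsed_months):
    """Months until `target` is reached if a linear trend continues.

    The slope is estimated from two points: `start` (the score
    `elapsed_months` ago) and `current` (the score today).
    """
    slope = (current - start) / elapsed_months  # percentage points per month
    if slope <= 0:
        raise ValueError("trend must be increasing to extrapolate")
    return max(0.0, (target - current) / slope)


current = 38.8          # GPT-5-high score quoted in the article
start = current / 3     # ASSUMPTION: "more than three times" read as exactly 3x
elapsed = 15            # months, as quoted in the article

# Illustrative thresholds, not figures from the report.
for target in (50.0, 60.0, 70.0):
    months = months_to_reach(target, current, start, elapsed)
    print(f"{target:.0f}% reached in about {months:.1f} months")
```

The output is sensitive to the assumed starting value and to which threshold counts as "surpassing" human experts, so it should be read as a rough consistency check on the trend rather than as a forecast.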

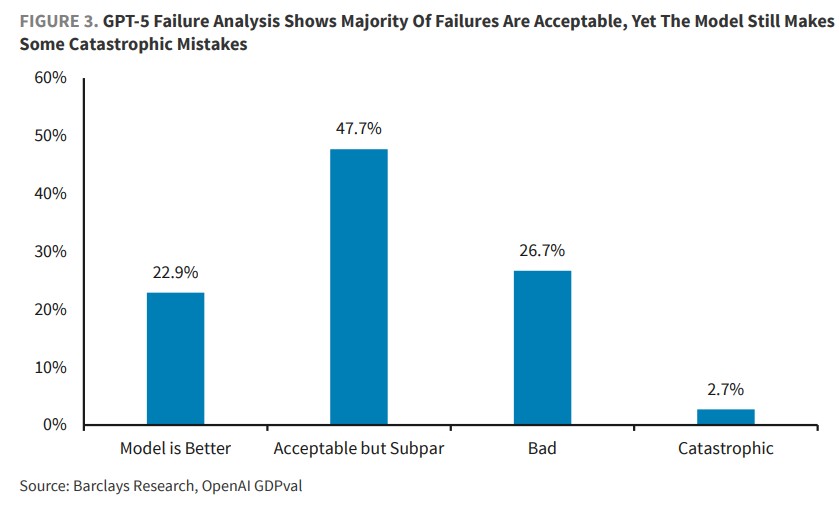

An analysis of GPT-5's errors shows that although the model still makes some catastrophic mistakes (2.7%), 47.7% of its errors are classified as "acceptable but not good," and in 22.9% of cases the model's output is even better than the human's.

Barclays analysts believe that the raw intelligence of AI models, especially GPT-5, has already reached a level that surpasses human experts. With more post-training (fine-tuning, reinforcement learning), the era in which AI fully surpasses industry experts is not far off.