NVIDIA extends its AI computing power legend: DGX Spark makes its debut, bringing data center-class computing power to the desktop

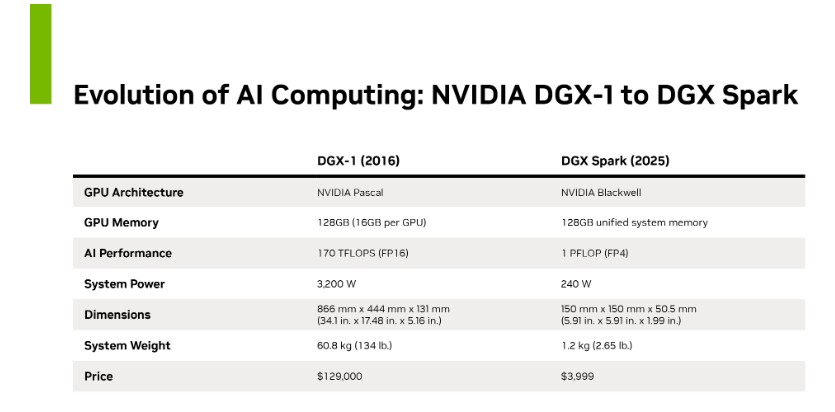

NVIDIA has launched the world's smallest AI supercomputer, DGX Spark, which aims to give small and medium-sized enterprises and AI developers supercomputing performance on the desktop. Built on the NVIDIA Grace Blackwell architecture, the product is expected to become a new growth driver and could help the stock reach its $300 price target. NVIDIA's shares have risen 40% this year and its market capitalization has stabilized at about $4.6 trillion, as the company continues to lead the investment boom across the global AI computing power industry chain.

According to the Zhitong Finance APP, the "AI chip giant" NVIDIA (NVDA.US) is set to officially launch the world's smallest AI supercomputer on Wednesday local time, offering an AI stack based on NVIDIA's Grace Blackwell architecture in a compact desktop form. The arrival of what is effectively a miniature high-performance AI server suggests that NVIDIA may be on the brink of a strong new growth driver. That would make it more plausible for the stock to hit the $300 price target recently set by Wall Street firm Cantor Fitzgerald, which corresponds to a market value of over $7 trillion.

At the same time, this launch suggests that the current "super bull market" in the global AI computing power industry chain, dominated by NVIDIA, TSMC, Broadcom, and Micron Technology, is far from over. The industry chain is expected to remain the sector most favored by global capital in the near future. NVIDIA's stock has repeatedly hit all-time highs this year, gaining as much as 40%. It currently trades around $188, with a market value holding at about $4.6 trillion, the highest of any company in the world.
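The link between the $300 target and the "over $7 trillion" market value can be checked with a quick back-of-envelope calculation. The sketch below uses only the figures quoted above (roughly $188 per share and $4.6 trillion in market value) and assumes the share count stays constant; the function and variable names are illustrative and do not come from any analyst model.

```python
# Back-of-envelope check using only figures quoted in the article.
PRICE_NOW = 188.0         # USD, approximate current share price
MARKET_CAP_NOW = 4.6e12   # USD, approximate current market capitalization
TARGET_PRICE = 300.0      # USD, Cantor Fitzgerald price target


def implied_market_cap(price_now: float, cap_now: float, target_price: float) -> float:
    """Scale market cap with share price, assuming a constant share count."""
    implied_shares = cap_now / price_now  # assumption: no buybacks or issuance
    return implied_shares * target_price


target_cap = implied_market_cap(PRICE_NOW, MARKET_CAP_NOW, TARGET_PRICE)
upside = TARGET_PRICE / PRICE_NOW - 1

print(f"implied market cap at target: ${target_cap / 1e12:.2f} trillion")
print(f"implied upside from current price: {upside:.1%}")
```

Under these assumptions the target works out to roughly $7.3 trillion, or about 60% above the current price, which is consistent with the "over $7 trillion" figure.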

The new system, named "Nvidia DGX Spark," delivers what NVIDIA describes as enterprise data center-class supercomputing performance. It is equipped with the company's latest GB10 Grace Blackwell superchip, ConnectX-7 high-performance networking, and NVIDIA's proprietary AI software stack.

The core idea behind DGX Spark is to give small and medium-sized enterprises and AI developers quick access to an AI supercomputing system on the desktop. They would no longer need to spend millions of dollars renting cloud AI computing power backed by NVIDIA's Blackwell AI server clusters, or buying their own AI server racks. By contrast, the DGX Spark is priced at just $3,999.

Like the first DGX-1, the first Spark goes to Musk

Strictly speaking, Spark belongs to a new category of personal AI supercomputers that NVIDIA is rolling out with third-party partners (including ASUS and Dell), allowing business and consumer customers to develop and run large AI models and AI applications directly on their local PCs.

NVIDIA stated that Spark can be equipped with up to 128GB of memory, which should help significantly when running large, resource-intensive AI models.

"Looking back over the years, it was in 2016 that we successfully created the world's first high-performance AI server system—DGX-1, providing AI large model researchers at the time with an AI supercomputer that they could deploy at any time. I personally delivered the first AI server system to one of the co-founders of a small AI startup called OpenAI, Elon Musk—thus giving birth to the epoch-making ChatGPT and igniting the global AI revolution," said Jensen Huang, CEO of NVIDIA, in a statement "The DGX-1 has opened the era of AI supercomputers and unlocked the scaling laws that drive modern AI large models. With DGX Spark, we will fully return to this mission—distributing an AI supercomputer to every AI developer, helping them ignite the next wave of breakthroughs in AI technology."

Echoing the original DGX-1 handover, and as if to underline Musk's role in the push toward AGI or ASI, Jensen Huang this week personally delivered the world's first "Nvidia DGX Spark" system to Musk, who has since left OpenAI and founded the emerging AI company xAI.

The company also offers DGX Station, a physically larger and more powerful desktop system built on Nvidia's GB300 Grace Blackwell Ultra desktop superchip.

What is Spark's "unique selling point" compared to the expensive Blackwell AI server computing clusters?

However, investors and developers should not expect that owning a Spark or Station system is enough to fully run trillion-parameter AI models or other AI applications that require massive computing resources. Spark and Station cannot replace the far more expensive Blackwell AI server racks (rack-level DGX GB200/GB300 NVL72) that are combined into computing clusters to run trillion-parameter models.

The new system runs Nvidia's own Linux-based DGX OS and its proprietary AI software stack, which are designed to help developers build and run large AI models on the desktop at any time, while also letting some PC users run small and medium-sized models locally.

Nvidia also stated that users can choose to connect two Spark AI supercomputer systems, allowing them to run larger AI large models with parameter scales of up to 405 billion.

Spark and Station are designed to complement Nvidia's existing, expensive AI server cluster products, letting users complete part of the prototyping and fine-tuning of large AI models locally before broader deployment.

From the perspective of AI engineers, "Nvidia DGX Spark" combines unified memory, a desktop form factor, and an enterprise-grade software stack. This lets them run inference and fine-tuning on models ranging from 70B to 200B parameters directly on local devices, within offline or compliance-bound data boundaries, and then migrate seamlessly to large-scale clusters to reproduce and scale the work.

The overall positioning of DGX Spark is "desktop size, data center-level experience": a single-machine peak of about 1 PFLOP (FP4, including sparsity), 128GB of unified memory, built-in DGX OS (the full CUDA-X AI stack), standard ConnectX-7 200Gb/s networking, NVLink-C2C (CPU-GPU bandwidth roughly 5× that of PCIe 5), and the Grace Blackwell superchip. It can perform local inference and fine-tuning of contemporary large models, supporting models of up to about 200 billion parameters on a single machine.
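A rough way to see why 128GB of unified memory maps to about 200 billion parameters on one machine, and about 405 billion on two linked machines, is to estimate the size of the model weights alone at different numeric precisions. The sketch below is an illustration under stated assumptions, not NVIDIA's sizing method: it counts only weights (ignoring KV cache, activations, and OS overhead), treats 1GB as 10^9 bytes, and assumes the quoted limits refer to 4-bit (FP4) weights. All names are hypothetical.

```python
# Weight-only memory estimate; real deployments also need room for
# KV cache, activations, and the operating system.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}
UNIFIED_MEMORY_GB = 128  # per DGX Spark, as quoted in the article


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Size of the weights in GB (1e9 params * bytes per param / 1e9 bytes per GB)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, units: int = 1) -> bool:
    """True if the weights alone fit in the combined memory of `units` machines."""
    return weight_footprint_gb(params_billion, precision) <= UNIFIED_MEMORY_GB * units


# Model sizes and machine counts taken from the article.
for params, units in [(70, 1), (200, 1), (405, 2)]:
    for precision in BYTES_PER_PARAM:
        size = weight_footprint_gb(params, precision)
        verdict = "fits" if fits(params, precision, units) else "does not fit"
        print(f"{params}B @ {precision}: {size:.1f} GB, "
              f"{verdict} in {UNIFIED_MEMORY_GB * units} GB")
```

Under these assumptions, a 200B model needs about 100GB at FP4 and fits on one unit, while a 405B model needs about 202.5GB and only fits across two units. At FP8 or FP16, neither model's weights fit in the memory available.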

Two interconnected DGX Sparks can handle models of approximately 405 billion parameters, and a single DGX Spark starts at just $3,999. Its biggest selling point is therefore not raw computing power. It is that usable computing power, unified memory, and an enterprise-grade software stack are packed into a desktop machine, significantly lowering the technical barrier between a local AI prototype and online deployment while connecting seamlessly with DGX Cloud and data center environments.

In other words, DGX Spark brings a data center-level experience down to the desktop, allowing users to handle workloads at the 70B to 200B scale (inference, fine-tuning, evaluation, distillation) in minutes. Because DGX OS and CUDA-X AI mirror the data center DGX environment, the path from an AI idea to a lab experiment becomes much shorter. NVIDIA's DGX GB200/GB300 systems are thus "large AI computing power factories," while DGX Spark is more like a personal or team-level "AI workshop" for developers. The two do not substitute for each other. They are strongly complementary, in a prototype-to-production relationship.

With the new growth momentum brought by Spark, NVIDIA's bull market trajectory continues

There is no doubt that, with its powerful GPU processor systems and proprietary CUDA software ecosystem, NVIDIA still holds a commanding lead in the global AI computing power race. In terms of financial performance, Spark is set to become a new growth driver for NVIDIA and an amplifier of its AI ecosystem.

Beyond the newly launched desktop AI system, the company continues to strike massive deals with AI leaders, including a planned investment of up to $100 billion in OpenAI, under which the developer of ChatGPT will procure up to 10 gigawatts of NVIDIA AI server clusters.

Last month, NVIDIA signed a significant agreement with CoreWeave (CRWV.US), a leader in cloud AI computing power leasing, which will receive AI computing power orders worth $6.3 billion from this chip giant.

Recently, NVIDIA has also supplied enormous AI GPU computing clusters to xAI, the OpenAI competitor founded by Elon Musk, as well as to Amazon, Google, Meta (Facebook's parent company), Microsoft, and many other tech giants. Then there is the $500 billion Stargate Project: starting in 2025, OpenAI, Oracle, and SoftBank are jointly building this large AI infrastructure project, which has been called the "New Era Manhattan Project."

DGX Spark represents NVIDIA's new growth vector in local, personal-level AI supercomputing systems. It works as a front-end play that accelerates market penetration and expands the ecosystem, and it should significantly strengthen NVIDIA's integrated AI hardware-software ecosystem and its developer moat. The larger share of earnings growth, however, will still come from data center and supercomputing-scale deployments and from ultra-large computing infrastructure projects (such as the 10GW-level projects brought by OpenAI). This is also the core logic behind Cantor Fitzgerald raising its NVIDIA price target from $240 to $300, the highest on Wall Street.

In the view of top Wall Street institutions like Cantor, NVIDIA will remain the core beneficiary of the trillion-dollar wave of AI spending. These institutions believe the stock's run of record highs is far from over. Wall Street analysts have recently kept raising their 12-month price targets for NVIDIA, and the latest average target implies that NVIDIA's total market capitalization will break the $5 trillion milestone within a year.

As the world's most valuable company by market capitalization, NVIDIA can be regarded as the leader of the global AI computing power industry chain. In the eyes of institutional and retail investors, NVIDIA's continued run to new highs therefore indicates that this round of the "super bull market" in the global AI computing power industry chain is far from over, and that the industry chain will remain the sector most favored by global funds in the near future.

According to Wall Street firms Cantor, Citigroup, Loop Capital, and Wedbush, the global wave of artificial intelligence infrastructure investment centered on AI computing hardware is far from over; it is only at the beginning. Driven by an unprecedented "AI computing power demand storm," this round of AI investment is expected to reach $2 trillion to $3 trillion. NVIDIA CEO Jensen Huang even predicts that AI infrastructure spending will reach $3 trillion to $4 trillion by 2030, and that the scale and scope of these projects will bring significant long-term growth opportunities for NVIDIA.