The "New Decade" of China's Computing Power Chips

China's computing power chips are entering a new decade, after 40 years of cycling between in-house development and abandoning it. Over the last five years, system and platform manufacturers have returned to developing their own chips, driving a shift toward heterogeneous computing that combines CPUs and xPUs. The draft 14th Five-Year Plan emphasizes technological self-reliance and strength, focusing on key technologies such as semiconductors. Over the next five to ten years, the breakthrough for domestic computing power chips lies in a unified instruction set architecture, with RISC-V expected to become the standard, promoting architectural innovation and efficient use of R&D resources.

Over the past 40 years, processor chips have followed a "negation of the negation" spiral: in-house development, then abandonment of in-house development, then a return to in-house development.

Over the last five years, a growing number of system and platform manufacturers have rejoined the "chip war" by developing their own chips, and a new trend has emerged: the CPU-centered homogeneous computing system is giving way to heterogeneous systems that combine CPUs and xPUs.

Players in the "chip war" face several questions: how innovative is their xPU architecture, how much room remains for continued innovation, can application scale amortize hardware costs, and what will it cost to build an ecosystem around the innovation?

The recently released draft of the 14th Five-Year Plan also calls for accelerating high-level technological self-reliance, comprehensively strengthening independent innovation capabilities, vigorously tackling "chokepoint" technologies, and focusing on key technology areas such as semiconductors. So where will the breakthrough for domestic "computing power chips" come from over the next five to ten years?

We believe it lies in unifying the instruction set architecture (ISA).

A consistent instruction set architecture makes innovation at the architectural level easier. For example, if RISC-V were adopted as the unified instruction set, all CPUs, GPUs, and xPUs could be developed on RISC-V and its extensions, using R&D resources efficiently while expanding economies of scale.

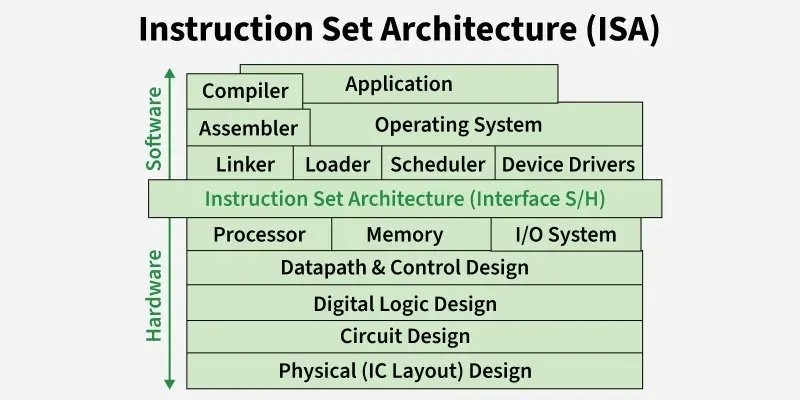

The instruction set acts as a "connector" between software and hardware, allowing software written according to standards to issue computational instructions to hardware.
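To make the "connector" idea concrete, here is a minimal Python sketch of how the RISC-V base integer ISA (RV32I) fixes the bit layout of an R-type instruction such as `add`. The field positions follow the published RISC-V specification; the helper names `encode_r_type` and `decode_r_type` are hypothetical and exist only for this illustration. This shared bit-level contract is what lets compilers and chips from different vendors work together.

```python
R_TYPE_OPCODE = 0b0110011  # the OP major opcode shared by ADD, SUB, AND, OR, ...


def encode_r_type(funct7: int, rs2: int, rs1: int, funct3: int, rd: int,
                  opcode: int = R_TYPE_OPCODE) -> int:
    """Pack the six R-type fields into one 32-bit instruction word."""
    return (((funct7 & 0x7F) << 25) | ((rs2 & 0x1F) << 20) | ((rs1 & 0x1F) << 15)
            | ((funct3 & 0x7) << 12) | ((rd & 0x1F) << 7) | (opcode & 0x7F))


def decode_r_type(word: int) -> dict:
    """Unpack a 32-bit word back into its R-type fields, as a decoder would."""
    return {
        "funct7": (word >> 25) & 0x7F,
        "rs2": (word >> 20) & 0x1F,
        "rs1": (word >> 15) & 0x1F,
        "funct3": (word >> 12) & 0x7,
        "rd": (word >> 7) & 0x1F,
        "opcode": word & 0x7F,
    }


# add x3, x1, x2  ->  funct7=0, rs2=2, rs1=1, funct3=0, rd=3
word = encode_r_type(funct7=0, rs2=2, rs1=1, funct3=0, rd=3)
print(hex(word))  # 0x2081b3
print(decode_r_type(word))
```

Any toolchain that emits these bits and any core that decodes them agree on what `add x3, x1, x2` means; that agreement, not the bits themselves, is the asset an instruction set ecosystem is built on.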

01 Economic Scale and Ecosystem Costs Determine the "Life and Death" of Architecture

Computers have a history of nearly 80 years. Early computing was centralized: only a few professionals could access expensive computing resources, and only through terminal devices.

After the 1980s, PCs and computer networks based on microprocessors emerged, transforming the computing model from centralized to distributed. Later, smartphones and cloud computing systems evolved the computing model into a complex system composed of centralized cloud centers and "ubiquitous distribution" of smart terminals, with the cloud center itself being a massive distributed system.

Today, CPUs are dominated by two instruction sets: x86 in PCs and servers, and ARM in smartphones.

The dominance of x86 and ARM is the result of market reshuffling.

Looking back over the past 40 years, the industry produced many distinctive architectures and products, but most have faded away: Intel's RISC architectures i860/i960, Motorola's 68000, and the PowerPC architecture Motorola developed jointly with IBM and Apple, among others. The reasons why instruction set architectures converged so quickly, from dozens to just a few, vary.

x86 beat RISC by continually "copying the homework" of high-end RISC designs while adding instruction subsets to support new application demands. Because PC and server CPUs share the x86 architecture, the large shipment volume of PC chips helps amortize the R&D costs of server CPUs, an objective condition that also helped x86 stand out from the competition.

On the surface, RISC CPUs fell short because of the enormous investment required in software and hardware. Fundamentally, they could not disrupt the existing software and hardware ecosystem, which rests on a large number of standard or de facto standard interfaces such as the instruction set architecture. That dominance could not be shaken even by an alliance like Intel and HP.

In the supercomputing field of the 1990s, companies like nCUBE, KSR, and Thinking Machines developed their own CPUs and MPP supercomputing systems, proposing many intriguing new system architectures. In particular, KSR introduced a pure cache storage architecture called Allcache (COMA), building the first parallel supercomputer based purely on cache. Its CPU ran at only 20MHz, yet its power consumption and heat dissipation efficiency far exceeded that of Intel's 486, which ran at 50MHz at the time.

Ultimately, various innovative architectures "lost to" x86.

This is not to say that these architectures lacked innovation; fundamentally, architectural innovation cannot compete with economic laws. Therefore, at the beginning of this article, we call for a unified instruction set architecture for China's computing power chips in the next five to ten years.

02 Architectural Innovation Is Difficult, Ecosystem Building Is Even Harder: The Barriers Lie in Software and Collaboration

Intel launched the Pentium 4 processor around 2000, and its clock frequency eventually approached 4GHz. Twenty-five years later, many products still run at roughly this frequency. This is because, after the move to nanometer-scale processes, the scaling benefits associated with Moore's Law gradually faded, and transistor switching speeds stopped improving as quickly.

Today, the industry improves performance by packing in more transistors, and the basic idea is parallelism: wider data paths, more functional units, more processor cores, and so on. But with so many components added, how are they controlled and managed? That depends on computer architecture.

Computer architecture is the interface between hardware and software and determines the division of labor between them. Depending on how that labor is divided, architectures fall roughly into three types:

- Aggressive structure (fully dynamic optimization): Like the pure cache storage architecture mentioned above, it assumes that software has limited ability to analyze and optimize at run time, so it performs as much dynamic optimization as possible in hardware; this often leads to overly complex hardware and excessive power consumption;

- Conservative structure (static optimization): Hardware only provides the necessary facilities, such as a large number of registers or SRAM, relying on software to achieve high performance. The advantage of this solution is that hardware is simplified, while the downside is that programming is inconvenient and performance is not guaranteed;

- Compromise structure (optimization combining dynamic and static): Hardware makes some dynamic optimizations, such as cache, while software still has room for optimization, solving performance and programming issues through hardware-software collaboration.
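The compromise structure's best-known dynamic optimization, the cache, fits in a few lines. The following Python model of a direct-mapped cache is a minimal sketch; the line count and block size are arbitrary illustrative parameters, not taken from any real chip. It shows hardware exploiting locality automatically, and also why software still has room to optimize: the same cache that serves a sequential scan well misses on every access of a badly strided one.

```python
def simulate_direct_mapped(addresses, num_lines: int = 4, block_size: int = 16):
    """Return (hits, misses) for a direct-mapped cache.

    Each memory block maps to exactly one cache line (block % num_lines);
    the line stores a tag so the hardware can tell which block it holds.
    """
    tags = [None] * num_lines  # start with an empty cache
    hits = misses = 0
    for addr in addresses:
        block = addr // block_size
        index = block % num_lines
        tag = block // num_lines
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag  # fetch the block from memory, evicting the old one
    return hits, misses


sequential = list(range(0, 256, 4))  # 64 consecutive 4-byte loads
strided = list(range(0, 4096, 64))   # 64 loads, 64 bytes apart

print(simulate_direct_mapped(sequential))  # (48, 16)
print(simulate_direct_mapped(strided))     # (0, 64)
```

In the strided case every address maps to the same line, so each load evicts the previous block. Software can fix this, for example by reordering loops so accesses become sequential; that split of responsibility is what the compromise structure relies on.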

Because CPUs must run operating systems, compilers, and a variety of complex control-oriented applications, which involve a great deal of serial work, high-end CPUs often adopt the aggressive structure. But the resulting architecture is extremely complex and hard to verify, which creates a huge R&D workload. Hardware vulnerabilities such as Spectre and Meltdown have also shown that these structures are exposed to transient execution attacks.
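Why serial work pushes CPUs toward aggressive single-core designs can be quantified with Amdahl's law. The author does not cite it, but it is the standard model for this argument: if a fraction p of a program's run time can be parallelized across n cores, the speedup is S(n) = 1 / ((1 - p) + p / n), which can never exceed 1 / (1 - p). The sketch below simply evaluates that formula and deliberately ignores memory, communication, and synchronization costs.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup on `cores` cores when only `parallel_fraction`
    of the single-core run time can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0 or cores < 1:
        raise ValueError("need 0 <= parallel_fraction <= 1 and cores >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


for p in (0.5, 0.9, 0.99):
    row = [round(amdahl_speedup(p, n), 1) for n in (8, 64, 1024)]
    print(f"parallel fraction {p}: speedups {row}, ceiling {1 / (1 - p):.0f}x")
```

With 90% parallel code, even 1,024 cores deliver just under a 10x speedup, so for serial-heavy workloads such as operating systems and compilers, making each core faster (the aggressive structure) remains attractive despite its complexity.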

Currently, the industry tends to favor a different approach: increasing the number of processor cores to improve performance. The xPU chips that represent computing power are typical many-core structures. This architecture suits applications such as image processing and neural networks, which are inherently parallel. As long as the hardware provides sufficient compute units, storage units, and interconnects, and the software expresses the parallelism, programs can run at high speed on parallel hardware.
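"Software expressing parallelism" usually means data-parallel decomposition: split the data, compute partial results independently, then combine them. The Python sketch below uses a thread pool purely to show that structure; the helper names are hypothetical, and under CPython's global interpreter lock it will not actually speed up pure-Python arithmetic. On a real xPU the same pattern would be written in a parallel framework such as CUDA.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data: list, parts: int) -> list:
    """Cut data into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum_of_squares(chunk: list) -> int:
    """Independent per-chunk work: no chunk depends on any other."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: list, workers: int = 4) -> int:
    chunks = split(data, workers)  # 1. decompose
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))  # 2. compute
    return sum(partials)  # 3. combine (reduce)


print(parallel_sum_of_squares(list(range(1000))))  # 332833500
```

Because the chunks share no state, the hardware is free to run them on as many compute units as it has; the programmer's job is only to expose that independence.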

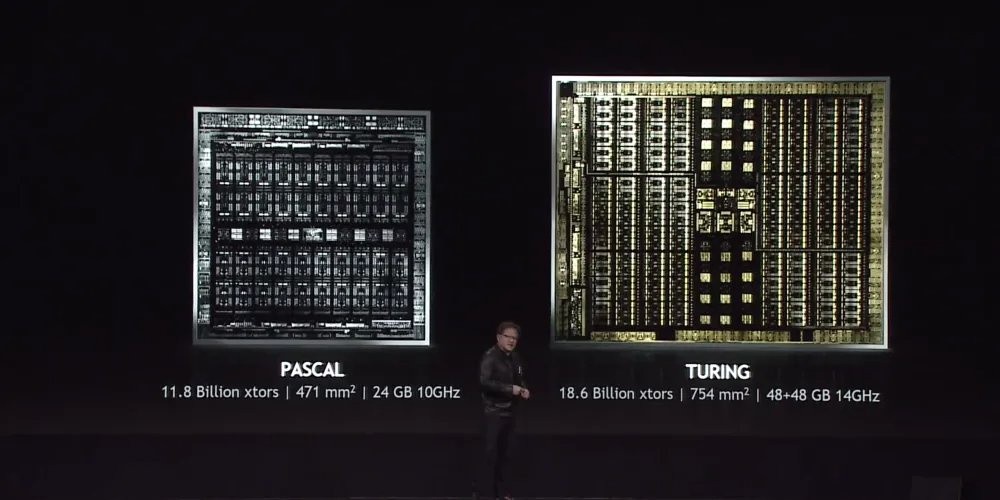

NVIDIA PASCAL and TURING architecture GPUs have a large number of CUDA computing cores.

Intel Xeon Phi, Google TPU, etc., are specially designed many-core accelerators, while the more popular GPGPU has inadvertently become a many-core accelerator—initially used only for graphics rendering, not specifically designed for AI.

Whether TPU or GPGPU, before many-core xPU "computing power chips" can be deployed at scale, they must first solve the ecosystem problem. The ecosystem is the totality of software running on the processor: application software, plus the system software, middleware, libraries, and so on that support developing and running applications. Here, users prefer NVIDIA products because CUDA has a mature parallel software ecosystem.

Recall the Intel-HP collaboration mentioned earlier. In 1994, the two companies began jointly developing the IA-64 Itanium processor, based on an EPIC architecture incompatible with x86; the effort spanned more than a decade at enormous cost and ultimately failed. The key reason is that, after more than 40 years of evolution, x86 has built an industrial ecosystem that no other processor architecture can match or replicate.

A new architecture and new products from Intel and HP could not solve the problem of building a new ecosystem.

Gartner's analysis of the enterprise software market from 2009 to 2018 offers another insight: over the decade, the market share of x86 software kept rising, and by 2018 global spending on x86 software development (including enterprise application software, infrastructure software, and vertical-specific software) reached $60 billion. IDC data for 2019 showed that total global server hardware revenue was only $80 billion. In other words, the cost of software development is far higher than that of hardware. Developing a new processor architecture is already very expensive, and hardly anyone will invest even more to develop the supporting software.

Based on this, it can be predicted that for a long time to come, x86 CPUs will still dominate the server market.

Some may ask: where are the opportunities for ARM64? Its main appeal is breaking Intel's monopoly in the x86 server market. Intel's CPU gross margins are so high that everyone wants a share of the pie, just as AI chip makers want a piece of NVIDIA's.

Ultimately, several reasons may be decisive for the future success of ARM servers:

- First, large companies that control their full technology stack, including applications, such as Apple and Amazon, are abandoning x86. They fully control their ecosystem migration, as well as their production volumes and gross margins.

- Second, edge-cloud integration: ARM's advantages at the edge carry over to the cloud, for example in Android cloud services. ARM servers are better suited to running Android apps, applications can migrate freely between cloud and edge, and cloud gaming no longer has to rely on virtual machines in the cloud.

The story of x86 continues, ARM's offensive is rapid, and open-source RISC-V still needs to work hard.

RISC-V is widely discussed in the industry, from ARM being "expensive" to RISC-V's openness and suitability for research, but its main problem remains commercialization.

In recent years, RISC-V's relatively successful applications have mostly been in scenarios with simpler software, specifically the embedded field represented by microcontrollers (MCUs), such as storage products from Seagate and Western Digital. In embedded scenarios such as the Internet of Things, demand is highly fragmented. Although RISC-V allows instruction set extensions customized to an application's characteristics, customizing chips category by category sacrifices the economies of scale of the integrated circuit industry.

Beyond software and applications, the RISC-V hardware ecosystem is still immature: there are few competitive, cost-effective processor cores, and high-performance on-chip networks (NoC) for multi-core interconnects are lacking. For on-chip networks in particular, the industry still uses ARM solutions, but ARM will not license its on-chip network IP separately to RISC-V projects; instead, it bundles it with ARM CPU cores, which raises costs.

Some argue that as cross-platform languages and tools such as Java and Python become more popular, applications can migrate across platforms through virtual machine technology, and one instruction set can be emulated on another, so the importance of the instruction set architecture may diminish, breaking the x86/ARM "monopoly."
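Emulating one instruction set on another comes down to a fetch-decode-execute loop running in software. The minimal Python sketch below interprets just two RV32I instructions, `addi` and `add`, using their standard encodings; the `run` helper, its step limit, and the absence of branches and memory are simplifications made for this illustration. Even in this toy, each guest instruction costs many host operations (masking, shifting, comparing), which is one reason direct hardware support for instructions still matters, as the next paragraph argues.

```python
MASK32 = 0xFFFFFFFF


def sign_extend(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of value as a two's-complement number."""
    sign_bit = 1 << (bits - 1)
    return (value & (sign_bit - 1)) - (value & sign_bit)


def run(program: list, max_steps: int = 1000) -> list:
    """Interpret a list of 32-bit RV32I words; supports only ADDI and ADD."""
    regs = [0] * 32
    pc = 0
    for _ in range(max_steps):
        if pc // 4 >= len(program):
            break  # fell off the end of the program
        word = program[pc // 4]  # fetch
        opcode = word & 0x7F     # decode the common fields
        rd = (word >> 7) & 0x1F
        funct3 = (word >> 12) & 0x7
        rs1 = (word >> 15) & 0x1F
        if opcode == 0b0010011 and funct3 == 0:  # ADDI (I-type)
            result = regs[rs1] + sign_extend(word >> 20, 12)
        elif opcode == 0b0110011 and funct3 == 0 and (word >> 25) == 0:  # ADD (R-type)
            result = regs[rs1] + regs[(word >> 20) & 0x1F]
        else:
            raise ValueError(f"unsupported instruction {word:#010x}")
        if rd != 0:  # x0 is hard-wired to zero
            regs[rd] = result & MASK32  # write back, wrapping to 32 bits
        pc += 4  # no branches in this subset, so always fall through
    return regs


# addi x1, x0, 5 ; addi x2, x0, 7 ; add x3, x1, x2
regs = run([0x00500093, 0x00700113, 0x002081B3])
print(regs[1], regs[2], regs[3])  # 5 7 12
```

Production emulators reduce this overhead with techniques such as binary translation, but they cannot make a missing hardware instruction free.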

However, some facts point the other way. Intel keeps expanding its instruction set with new subsets such as SGX, AVX-512, and AI extensions, which shows that direct hardware support for instructions is crucial for performance and energy efficiency. In addition, because much of the industry's foundational and application software is optimized mainly for Intel CPUs, far fewer software configurations run smoothly even on AMD CPUs, which also use the x86 architecture. For this reason, Alibaba's public cloud platform uses only Intel CPUs, which readily support a wide range of older OS types, versions, and configurations.

From this perspective, RISC-V still has a long way to go before it can enter general-purpose computing platforms such as PCs and servers.

03 Unified Instruction Set: The Key Path for China's Computing Chip Scaling

In recent years, system and platform manufacturers have begun to develop computing chips again: in the United States, there are companies like Apple, Google, Amazon, and Microsoft, and there are many companies in China as well.

Among all in-house chip efforts, the cloud vendor model is feasible because cloud vendors' profits come from value-added services, not hardware. Since they control the entire software and hardware stack, cloud vendors face fewer difficulties porting their ecosystem, and their large scale lets them absorb the costs of chip development.

However, at this stage, most companies' in-house chips are used only internally, so external customers still need independent chip suppliers.

Among the many system manufacturers developing their own chips, Apple is a very successful case: it has essentially brought the processors for its core product lines fully in-house, with the A series for phones, the M series for tablets and PCs, the W series for watches, and the H series for headphones.

Apple's self-developed chip matrix, data updated to September 2025

"High product pricing" can serve as a superficial indicator to judge the success of Apple's self-development.

Compared with ARM's off-the-shelf CPU cores, Apple's in-house CPUs offer higher performance at higher cost, but paired with Apple's own system software they deliver an optimized overall user experience. Backed by Apple's marketing system, this creates a "high-end" image that lets its products sell at high prices.

However, many failed projects saw only the superficial indicators of Apple's in-house chips. Developing chips merely for the sake of having chips, or chasing paper specifications while ignoring software differentiation and ecosystem capabilities, which together drive a better user experience, may deliver little value.

Software defines everything, including "success and failure."

Whether for CPUs or GPGPUs, adding value requires a software ecosystem that is differentiated from existing mature products. That does not mean everything must be rebuilt from scratch, including the instruction set: every additional instruction set requires more investment in its own software ecosystem, which makes "unification" extremely difficult.

As mentioned earlier, software investment exceeds hardware development investment, and software lagging behind hardware is now common in domain-specific architectures (DSA) and other xPU development. For example, many domestic intelligent computing centers involve large investments, but because of problems such as incomplete supporting software, their actual utilization is low, a direct consequence of software failing to keep pace with hardware. Recall the era of architecture wars: the giants fought fiercely, and in the end only a few architectures survived.

In fact, architectural innovation does not necessarily require a new instruction set; it can also be achieved within an existing instruction set framework, and RISC-V happens to be well suited to this.

For example, Tenstorrent has extended the RISC-V instruction set with AI-specific instruction subsets to build RISC-V-based AI acceleration solutions. In addition, many universities and research institutions in China and abroad have added cryptography-related instruction subsets to RISC-V and used them to support post-quantum cryptography.
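Extending an existing instruction set is practical because the RISC-V specification reserves major opcodes for non-standard extensions (custom-0 is `0b0001011`). The sketch below adds a hypothetical multiply-accumulate instruction, `mac rd, rs1, rs2` (computing rd += rs1 * rs2), under custom-0 and compares it with the standard `mul` + `add` pair from the M extension and base ISA. It is not Tenstorrent's actual extension or any published encoding; operand loads are not modeled, and only executed instruction counts are compared.

```python
MASK32 = 0xFFFFFFFF
OP = 0b0110011        # standard R-type arithmetic: ADD, and MUL from the M extension
CUSTOM_0 = 0b0001011  # major opcode reserved by RISC-V for custom extensions


def encode_r(funct7: int, rs2: int, rs1: int, funct3: int, rd: int, opcode: int) -> int:
    """Pack R-type fields into a 32-bit word using the standard RISC-V layout."""
    return (funct7 << 25) | (rs2 << 20) | (rs1 << 15) | (funct3 << 12) | (rd << 7) | opcode


ADD_X3_X3_X5 = encode_r(0b0000000, 5, 3, 0b000, 3, OP)        # add x3, x3, x5
MUL_X5_X1_X2 = encode_r(0b0000001, 2, 1, 0b000, 5, OP)        # mul x5, x1, x2
MAC_X3_X1_X2 = encode_r(0b0000000, 2, 1, 0b000, 3, CUSTOM_0)  # hypothetical mac x3, x1, x2


def execute(word: int, regs: list) -> None:
    """Execute one instruction, updating the register file in place."""
    opcode = word & 0x7F
    rd = (word >> 7) & 0x1F
    funct3 = (word >> 12) & 0x7
    rs1 = (word >> 15) & 0x1F
    rs2 = (word >> 20) & 0x1F
    funct7 = word >> 25
    if opcode == OP and funct3 == 0 and funct7 == 0:          # ADD
        value = regs[rs1] + regs[rs2]
    elif opcode == OP and funct3 == 0 and funct7 == 1:        # MUL (low 32 bits kept)
        value = regs[rs1] * regs[rs2]
    elif opcode == CUSTOM_0 and funct3 == 0 and funct7 == 0:  # hypothetical MAC
        value = regs[rd] + regs[rs1] * regs[rs2]
    else:
        raise ValueError(f"unsupported instruction {word:#010x}")
    if rd != 0:  # x0 is hard-wired to zero
        regs[rd] = value & MASK32


def dot_product(a, b, use_mac: bool):
    """Return (result, instructions executed) for sum(a[i] * b[i])."""
    regs = [0] * 32
    kernel = [MAC_X3_X1_X2] if use_mac else [MUL_X5_X1_X2, ADD_X3_X3_X5]
    executed = 0
    for x, y in zip(a, b):
        regs[1], regs[2] = x & MASK32, y & MASK32  # stand-in for loads (not modeled)
        for word in kernel:
            execute(word, regs)
            executed += 1
    return regs[3], executed


print(dot_product([1, 2, 3, 4], [5, 6, 7, 8], use_mac=False))  # (70, 8)
print(dot_product([1, 2, 3, 4], [5, 6, 7, 8], use_mac=True))   # (70, 4)
```

Existing RISC-V software keeps running unchanged because the base opcodes are untouched; only code that wants the new instruction needs compiler or library support for it, which is the ecosystem advantage of extending rather than replacing an instruction set.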

Therefore, we call for using RISC-V as the unified instruction set, with all CPUs, GPUs, and xPUs developed on RISC-V and its extensions, to avoid duplicated effort and wasted R&D resources.

Author of this article: Tang Zhimin, Dean of the School of Computing and Microelectronics at Shenzhen University of Technology, Chairman of Xiangdi Xian. Source: Tencent Technology, original title: "The 'New Decade' of China's Computing Power Chips"
