The "Core Controversy" of the AI Bubble: Can GPUs Really "Last" for 6 Years?

Investment bank Bernstein argues that a 6-year depreciation period for GPUs is reasonable, because the cash cost of running older chips is far below what they earn in rent and demand remains strong, which it says shows that the tech giants' current high profits are not financial window dressing. "Big Short" investor Michael Burry, however, warns that AI hardware iterates so quickly that its real useful life is only 2-3 years, and that the giants' longer depreciation schedules are merely an accounting "trick" to inflate short-term profits.

Author: Long Yue

Source: Hard AI

In the heated debate over AI investment, a core accounting question has become a new battleground for bulls and bears: what is the true economic lifespan of GPUs, the cornerstone of computing power? The answer bears directly on hundreds of billions of dollars of tech-giant profits and on whether current AI valuations amount to a bubble.

In a report released on November 17, Bernstein analysts argue that setting the depreciation period for GPUs at 6 years is reasonable. The report notes that, even allowing for technological iteration, the cash cost of operating older GPUs is very low relative to their market rental prices, so keeping them in service longer makes economic sense.

This finding suggests that the depreciation policies of large cloud service providers such as Amazon, Google, and Meta are largely fair rather than a deliberate effort to dress up their financial statements, which directly supports the case for the tech giants' profitability.

This view, however, stands in sharp contrast to the pessimism in the market. Critics led by "Big Short" Michael Burry, who predicted the 2008 financial crisis, argue that the actual lifespan of AI chips and similar hardware is only 2-3 years. Burry warns that tech giants are playing a dangerous accounting "trick" designed to artificially inflate short-term profits.

Bernstein: 6-Year Depreciation is Economically Feasible

Analyst Stacy A. Rasgon states explicitly in the report that GPUs can be operated profitably for about 6 years, which makes the depreciation accounting of most hyperscale data center operators reasonable.

The conclusion rests on an economic argument: the cash cost of operating an old GPU (mainly electricity and hosting fees) is far below its market rental price. Even if the hardware is no longer cutting-edge, as long as demand for computing power stays strong, keeping old GPUs running can still generate a substantial contribution profit.

According to Bernstein's data, even the 5-year-old NVIDIA A100 still earns providers "comfortable profits." Its calculations indicate that GPUs approach the cash-cost breakeven point only when they are as old as the 7-year-old Volta architecture. The report therefore concludes that the 5-6 year depreciation periods used by large cloud service providers (hyperscalers) are economically justified.

Economic Feasibility: High Rental Prices and Low Operating Costs

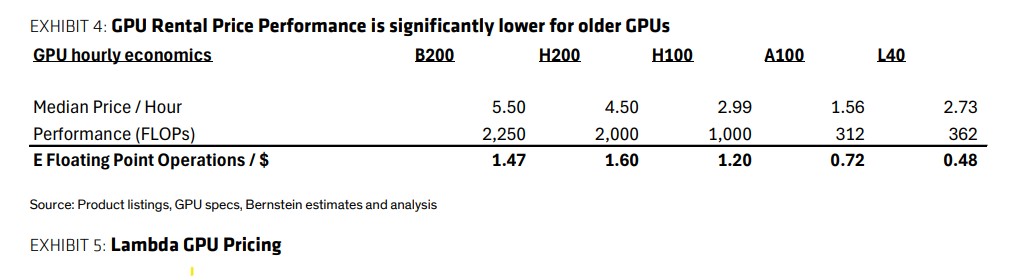

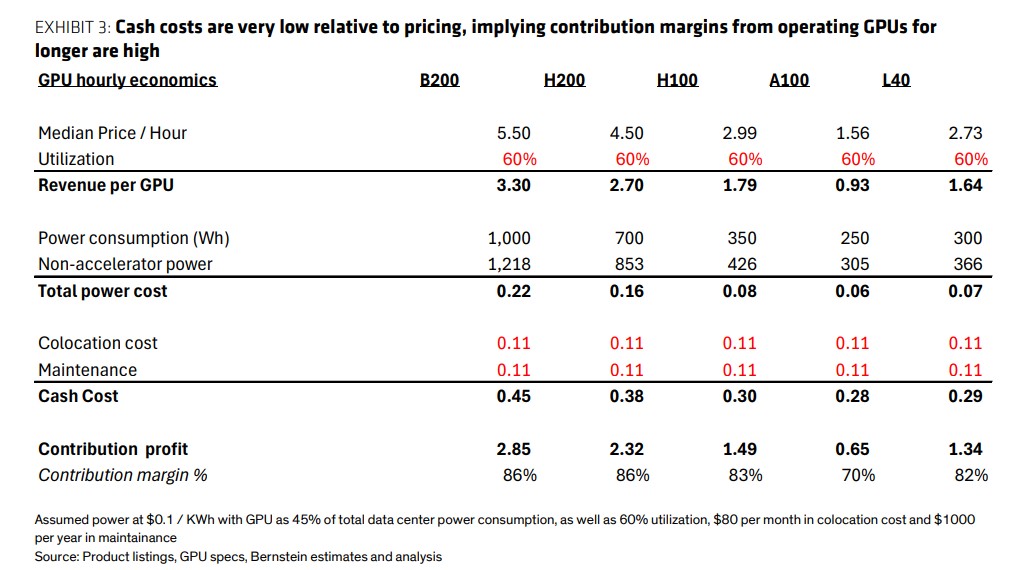

Bernstein's analysis breaks down the operating economics of GPUs: rental prices are far higher than the cash cost of running the chips.

Taking the A100 as an example, the report estimates its contribution margin at about 70%. Its hourly revenue is approximately $0.93, while cash costs, including electricity, hosting, and maintenance, are only $0.28. A margin this large gives cloud vendors ample economic incentive to keep GPUs in service as long as possible.

The report also notes that GPUs do not lose value linearly. They typically lose 20-30% of their value in the first year, because of "burn-in" issues and the market's preference for the latest hardware, but their value remains relatively stable after that.
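To make the report's A100 arithmetic concrete, here is a minimal Python sketch. The $0.93 and $0.28 hourly figures come from the article; the 8,760-hour year and the 80% utilization rate in the usage line are illustrative assumptions rather than Bernstein's inputs, and the function names are hypothetical.

```python
# Minimal sketch of the contribution-margin arithmetic in Bernstein's A100 example.
# From the article: $0.93 hourly revenue, $0.28 hourly cash cost.
# Assumptions (not from the article): an 8,760-hour year and the utilization rate.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def contribution_margin(revenue_per_hour: float, cash_cost_per_hour: float) -> float:
    """Share of hourly revenue left after cash operating costs."""
    return (revenue_per_hour - cash_cost_per_hour) / revenue_per_hour


def worth_operating(revenue_per_hour: float, cash_cost_per_hour: float) -> bool:
    """Cash-cost breakeven test: an already-purchased GPU adds cash profit
    while its rental revenue exceeds its cash cost (depreciation is non-cash)."""
    return revenue_per_hour > cash_cost_per_hour


def annual_contribution(revenue_per_hour: float, cash_cost_per_hour: float,
                        utilization: float = 1.0) -> float:
    """Yearly contribution profit per GPU at an assumed utilization rate."""
    return (revenue_per_hour - cash_cost_per_hour) * HOURS_PER_YEAR * utilization


if __name__ == "__main__":
    revenue, cost = 0.93, 0.28  # A100 figures cited in the report
    print(f"margin: {contribution_margin(revenue, cost):.1%}")
    print(f"worth operating: {worth_operating(revenue, cost)}")
    # 80% utilization is a hypothetical input, not a figure from the report
    print(f"annual contribution at 80% utilization: "
          f"${annual_contribution(revenue, cost, 0.8):,.0f}")
```

With the article's figures the margin works out to about 69.9%, consistent with the report's roughly 70%. The breakeven test mirrors the report's logic: because depreciation is a non-cash charge, a GPU that has already been bought keeps adding cash profit until its rental price falls to its cash operating cost.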

In addition, based on conversations with industry participants, the report concludes that GPUs can physically remain in use for 6-7 years or longer. Most early "burn-out" cases are attributed to configuration errors during the initial deployment of new hardware (that is, "burn-in" stage problems), rather than to design-life defects in the GPUs themselves.

Strong Demand for Computing Power, Old Chips Still Have a Market

The current market environment is another key factor supporting the value of older GPUs. The report stresses that in a "compute-constrained" world, demand for computing power is overwhelming. Leading AI labs generally believe that more compute is the path to greater intelligence, so they are willing to pay for any compute they can get, including older chips.

Industry analysts point out that A100 capacity is still nearly fully booked, which indicates that as long as demand stays strong, older and less efficient hardware retains its value.

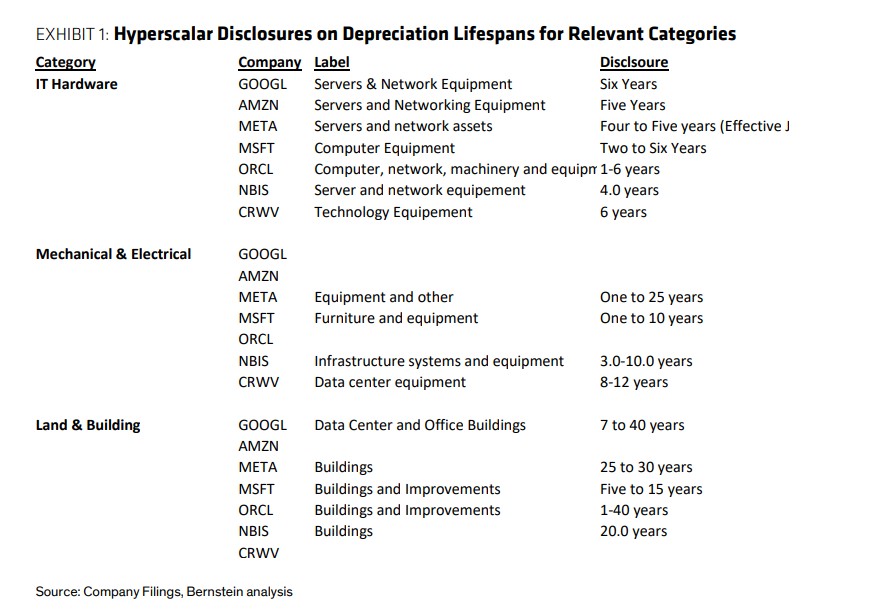

Depreciation Choices of Tech Giants

According to company filings, Google depreciates its servers and network equipment over six years; Microsoft uses a range of two to six years; and Meta is extending the useful life of some servers and network assets to 5.5 years, effective January 2025.

Not all companies are lengthening their depreciation periods, however. In the first quarter of 2025, Amazon shortened the estimated useful life of some servers and network equipment from six years to five, citing the accelerating pace of AI development. The move lends some support to the bearish argument and shows that views within the industry differ on how fast hardware iterates.

"Big Short" Warning: Accounting "Tricks" and Inflated Profits

In stark contrast to Bernstein's optimistic view, "Big Short" Michael Burry issued a stern warning on social media on November 11. He argues that tech giants are understating depreciation by extending the "effective useful life" of their assets, artificially inflating their earnings.
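The mechanism Burry describes can be illustrated with a minimal Python sketch of straight-line depreciation. Every number here is hypothetical: a $12 billion server purchase, $20 billion of annual profit before depreciation, and zero salvage value. The helper names are illustrative, and this is not a reconstruction of Burry's own model.

```python
# Minimal sketch: why a longer useful life raises near-term reported profit.
# Assumptions (not from the article): straight-line depreciation, zero salvage
# value, a hypothetical $12B server purchase, and a hypothetical $20B of
# annual profit before depreciation.


def annual_depreciation(cost: float, useful_life_years: float,
                        salvage: float = 0.0) -> float:
    """Straight-line depreciation expense per year."""
    return (cost - salvage) / useful_life_years


def reported_profit(profit_before_dep: float, cost: float,
                    useful_life_years: float) -> float:
    """Profit after subtracting one year of straight-line depreciation."""
    return profit_before_dep - annual_depreciation(cost, useful_life_years)


if __name__ == "__main__":
    capex = 12e9     # hypothetical server spend, in dollars
    pre_dep = 20e9   # hypothetical profit before depreciation, in dollars
    short = reported_profit(pre_dep, capex, 3)  # life in line with Burry's 2-3 years
    long_ = reported_profit(pre_dep, capex, 6)  # life in line with a 6-year policy
    # One possible definition of "overstated": gap relative to the short-life profit
    overstatement = (long_ - short) / short
    print(f"3-year life: ${short / 1e9:.1f}B, 6-year life: ${long_ / 1e9:.1f}B")
    print(f"profit higher by {overstatement:.1%} under the longer life")
```

Under these assumptions, annual depreciation falls from $4 billion to $2 billion when the useful life doubles from 3 to 6 years, so reported profit in the early years rises from $16 billion to $18 billion, or 12.5%. Total depreciation over the asset's life is $12 billion either way; a longer life shifts expense into later years rather than eliminating it. The article does not say how Burry computed his overstatement percentages, so the ratio above is only one plausible way to express the gap.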

According to Wallstreetcn, Burry noted that the product cycle of computing equipment such as AI chips and servers typically lasts only 2 to 3 years, yet companies including Meta, Alphabet, Microsoft, Oracle, and Amazon have extended their depreciation periods to up to 6 years. He estimates that from 2026 to 2028 this accounting treatment will inflate large tech companies' profits by $176 billion. Burry specifically pointed out that by 2028, Oracle's profits may be overstated by 26.9% and Meta's by 20.8%.

Burry is not alone in these concerns. As early as mid-September, Bank of America and Morgan Stanley warned that the market was severely underestimating the true scale of current AI investment and was unprepared for the coming surge in depreciation costs, which could leave the tech giants' actual profitability well below market expectations. These warnings, together with Burry's earlier disclosure of bearish options positions on NVIDIA, have intensified market concerns about the valuations of AI-related stocks.

This article is from the WeChat public account "Hard AI".