All in the same boat, is OpenAI a divine pearl or a demonic pill?

$OpenAI(OpenAI.NA) has been extremely busy lately, dabbling in e-commerce and social media and now launching a browser (see Dolphin Research's commentary). Hmm... it seems to have made enemies of half of the 'Seven Sisters'.

With a trending topic every three days, Dolphin Research can hardly keep up. On the surface, OpenAI is thriving, poised to become the next star to reshape humanity's trajectory. However, behind the star's glow, Dolphin Research senses OpenAI's anxiety.

The greater the responsibility, the greater the pressure. From 2026 onward, OpenAI's ability to commercialize is no longer just a demand from its early investors; it has become the lifeline of a trillion-dollar industry chain. An inflated valuation imposes stricter conditions, because the valuation itself depreciates with the cost of time. What is being tested is therefore not only the scale of commercialization but also how fast that scale grows. Once growth slows, the valuation bubble will burst first, even if the industry is still progressing. The late-1990s internet stock crash already wrote that script.

That said, Dolphin Research does not wish for the bubble to burst. A healthy, controllable bubble is actually good for an emerging industry: valuation premiums attract more capital, giving companies the funds to produce, expand, educate the market, and win users. Usage data then feeds back into product iteration, and ultimately users pay for a better experience, the industry turns profitable, and investors earn their returns.

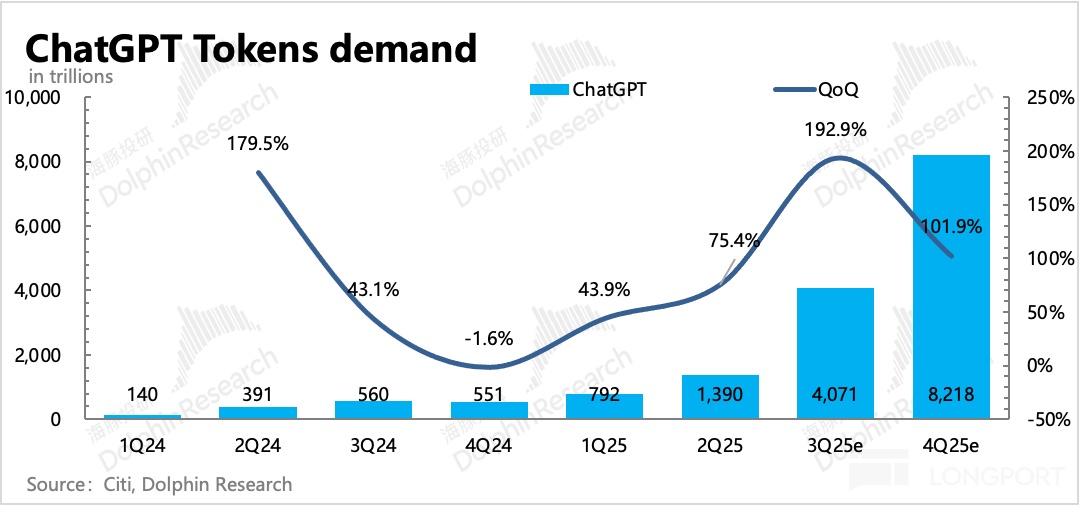

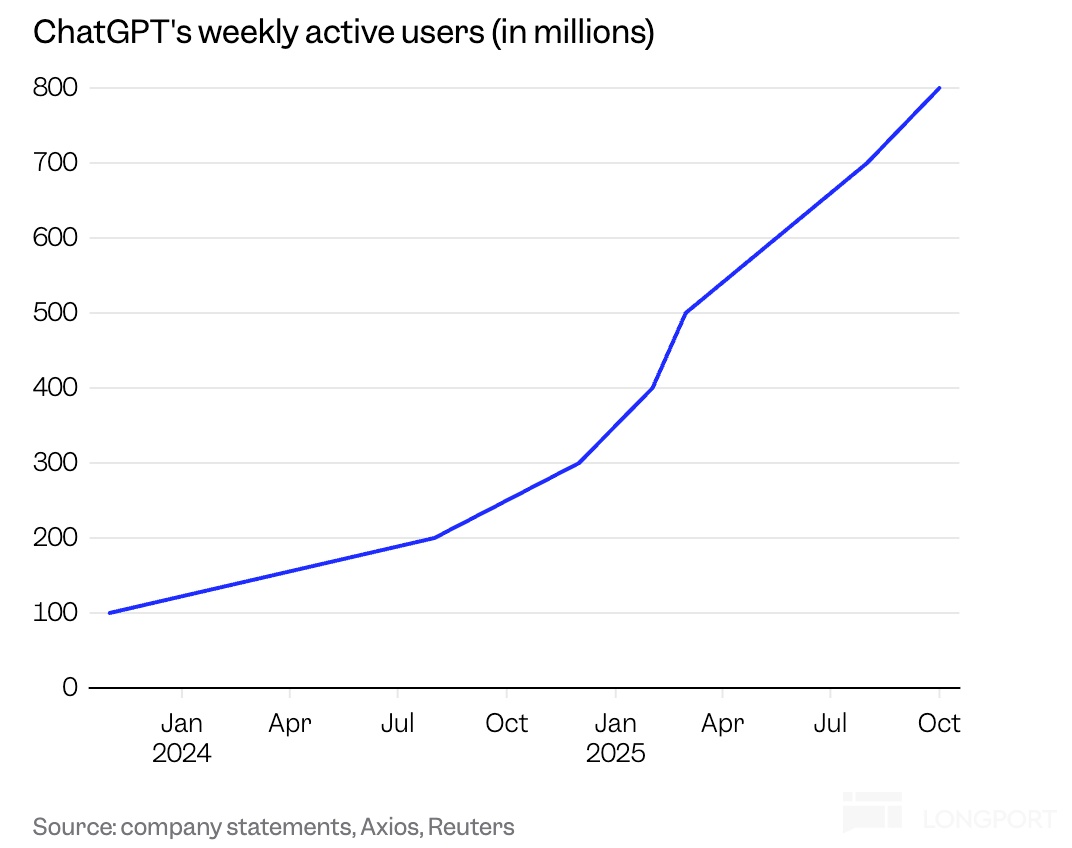

Given the severe mismatch between OpenAI's revenue and expenditure, the ecosystem tied to OpenAI is undoubtedly the biggest risk point in the industry. ChatGPT has already reached 800 million users, growing faster than any successful internet platform before it, yet the computing power demand behind it, and the supply orders OpenAI has already committed to, are growing even faster than the user base.

While OpenAI is in a temporary lull, Dolphin Research will first work through OpenAI's accounts: relying entirely on balance sheet expansion, can it sustain its cash flow before commercialization scales up? How much commercialization is needed to cover the high computing power costs to come, basic company operations, and the returns shareholders require? Is the company's 2030 revenue expectation of $200 billion enough? And, most critically, can OpenAI achieve it?

Dolphin Research will discuss these issues in two articles. The first focuses on OpenAI's computing power industry chain ecosystem and its cash gap; the second estimates OpenAI's commercialization potential to see whether it can bear that burden.

I. OpenAI's Computing Power 'Confused Accounts'

1. Interests Tied! This Complex Ecosystem Relationship...

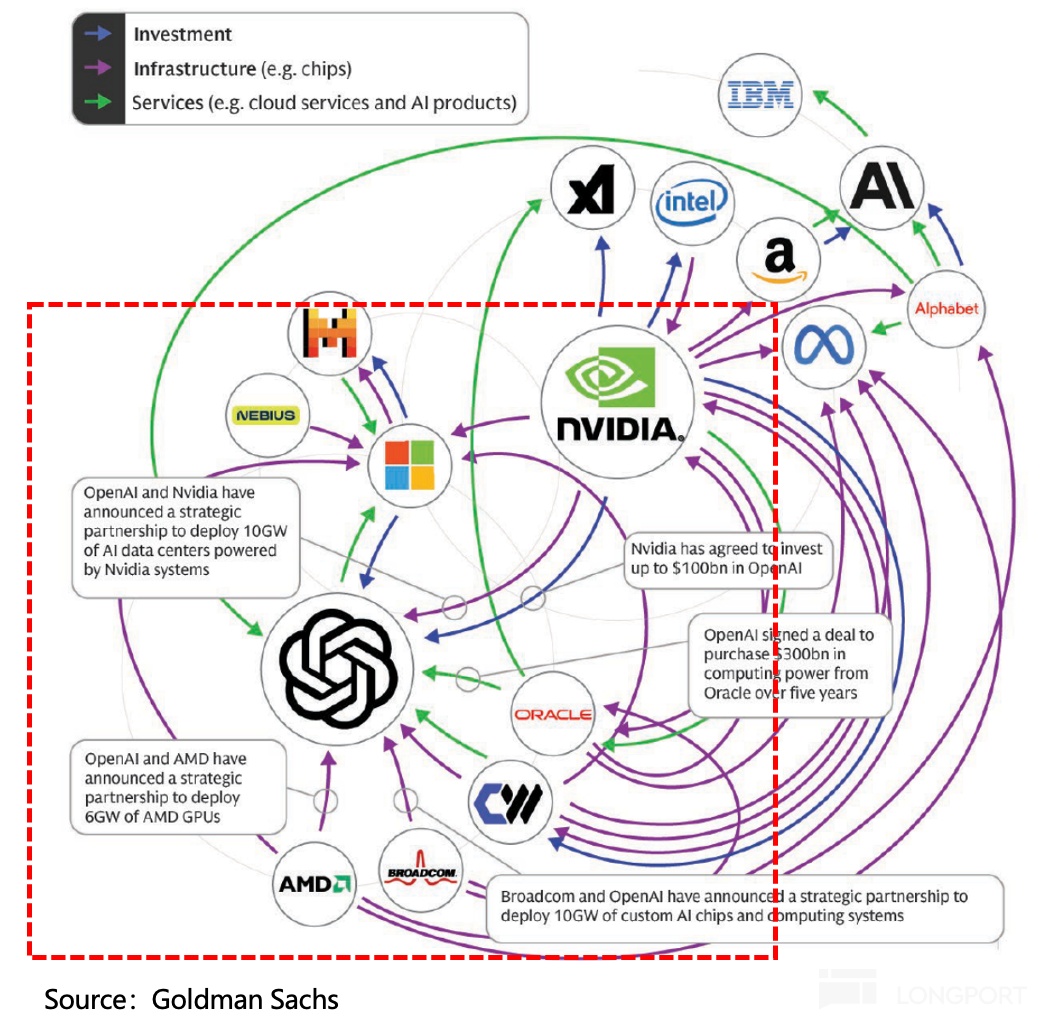

Since the end of September, the AI computing power industry chain has been in a 'free-for-all', with competition, cooperation, backstabbing, and equity ties among the companies thoroughly tangled. Dolphin Research has sorted through the key news; the relationship diagram gives a first sense of how complex these ties are.

Among them, OpenAI is the protagonist of protagonists. On paper, its relationships with the companies in the industry chain are customer-supplier relationships or strategic partnerships. In practice, OpenAI has bound the upstream AI infrastructure giants tightly to itself through data center investments involving up to 30GW of computing power and a $1.5 trillion ecosystem (at a total data center cost of $50 billion per GW).

Below, Dolphin Research will discuss in detail:

(1) Core of the Ecosystem: Stargate

The most complex cooperative ecosystem is OpenAI's Stargate project, which has deeply linked OpenAI with $Oracle(ORCL.US), $NVIDIA(NVDA.US), $SoftBank Group Corp.(9984.JP), and other companies. The market's criticism of 'capital circulation' and 'repeated stacking of computing power' lies here.

As early as 2024, media reported that Microsoft and OpenAI planned to invest $100 billion to build the Stargate AI supercomputer, which would be equipped with millions of dedicated server chips to support OpenAI's AGI development.

At that time, OpenAI and $Microsoft(MSFT.US) were still in their honeymoon period. Microsoft had invested a cumulative $13 billion in OpenAI; besides equity, OpenAI gave Microsoft certain technology licenses, exclusive supply of OpenAI's cloud computing needs, exclusive distribution of ChatGPT's API, and so on.

But in 2025, as OpenAI completed its reorganization, its relationship with Microsoft turned delicate, with occasional rumors of a falling-out or a cooling of ties.

The core conflict is over expanding computing power supply. As a mature listed company, Microsoft treats financial discipline as its bottom line, while OpenAI kept demanding that Microsoft rapidly expand its data centers to a scale of hundreds of GW. This reminds Dolphin Research of Sam's 'astonishing' statement this year: OpenAI's development will require 250GW of computing power by 2030.

250GW is simply too big a pie for Microsoft to swallow. As a rough yardstick, 1GW of power capacity corresponds to $50 billion of infrastructure investment, so 250GW would require trillions of dollars (about $12.5 trillion at that rate). At the same time, 1GW is equivalent to the simultaneous electricity consumption of 1 million American households, so 250GW is equivalent to 250 million households, while the total population of the United States is just over 300 million...
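
As a quick sanity check on that yardstick, the sketch below scales the article's two rules of thumb ($50 billion and 1 million households per GW) to any capacity. The function and constant names are illustrative only, and the inputs are the article's rough figures rather than measured data.

```python
# Rules of thumb quoted in the article (rough assumptions, not measured data).
COST_PER_GW_USD_BN = 50      # infrastructure cost per GW, in $ billion
HOUSEHOLDS_PER_GW_MN = 1     # US households drawing power at once per GW, in millions


def scale_capacity(gw):
    """Return (investment in $ trillion, equivalent households in millions)."""
    investment_tn = gw * COST_PER_GW_USD_BN / 1000
    households_mn = gw * HOUSEHOLDS_PER_GW_MN
    return investment_tn, households_mn


for gw in (10, 30, 250):
    investment_tn, households_mn = scale_capacity(gw)
    print(f"{gw:>3} GW -> ${investment_tn:.1f} trillion, "
          f"{households_mn} million households")
```

The same function reproduces the $1.5 trillion figure for 30GW and the $500 billion figure for Stargate's 10GW.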

With OpenAI complaining that Microsoft was holding back its development, Microsoft finally compromised, giving up its exclusive right to supply computing power for OpenAI's inference workloads and allowing OpenAI to stockpile computing power on other cloud platforms. OpenAI then turned to Oracle, Google, NVIDIA, AMD, and Broadcom, among others.

The relationship between OpenAI and Microsoft also changed. The Stargate project, which was initially intended to be built with Microsoft's help, was greatly upgraded after changing investors at the beginning of 2025, with the total investment amount rising from last year's $100 billion to $500 billion over the next four years, aiming to build a data center cluster with 10GW of computing power.

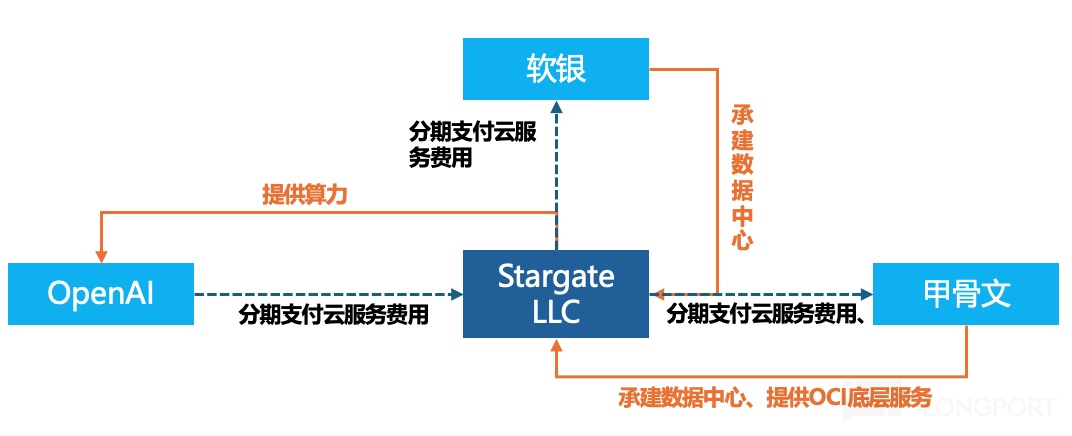

First, Stargate became an independent company, with shareholders including OpenAI, Oracle, SoftBank, and MGX (UAE AI Investment Fund), each responsible for data center construction and operation, energy development, and funding sources. OpenAI and SoftBank each hold 40% of the equity, while Oracle and MGX collectively hold the remaining 20%.

From the perspective of resource endowment, this newly established company is essentially a cloud service provider in which OpenAI is expected to have the decisive say. Most of the GPU chips in its data centers are purchased from NVIDIA, including but not limited to the GB200, GB300, and VR200 series; the earliest site, Abilene, uses NVIDIA chips almost exclusively.

At the end of September, NVIDIA further deepened its interest binding by investing a total of $100 billion in OpenAI through phased investments (injecting $10 billion for each 1GW deployed) and obtaining corresponding OpenAI equity (also calculated in phases, as long as OpenAI's valuation continues to rise, NVIDIA's shareholding ratio will not exceed 10%).

And the once close ally, Microsoft, is now only participating as a non-shareholding technical partner, with its role and status significantly weakened.

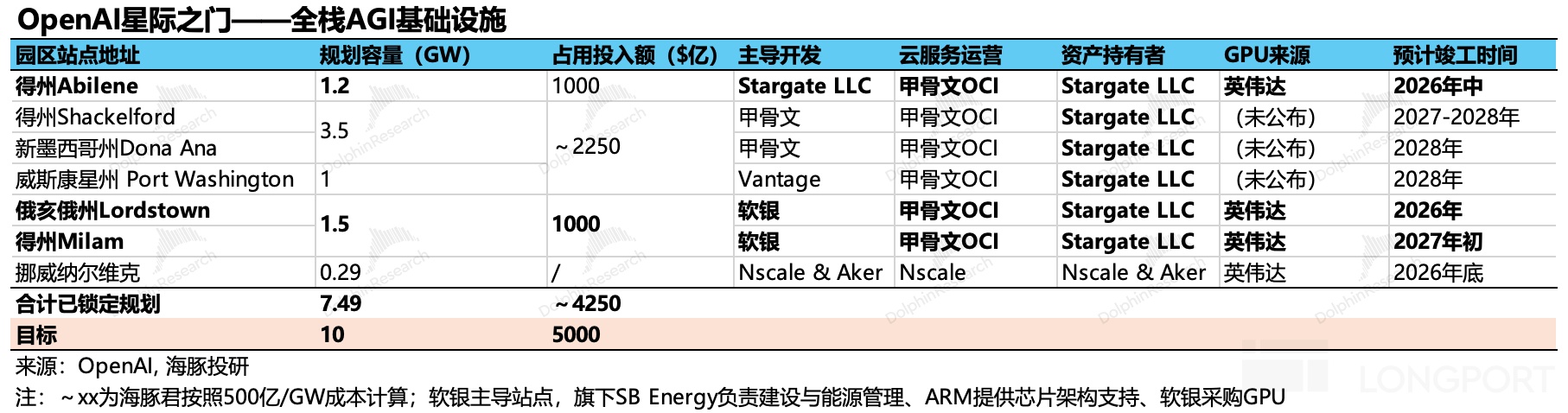

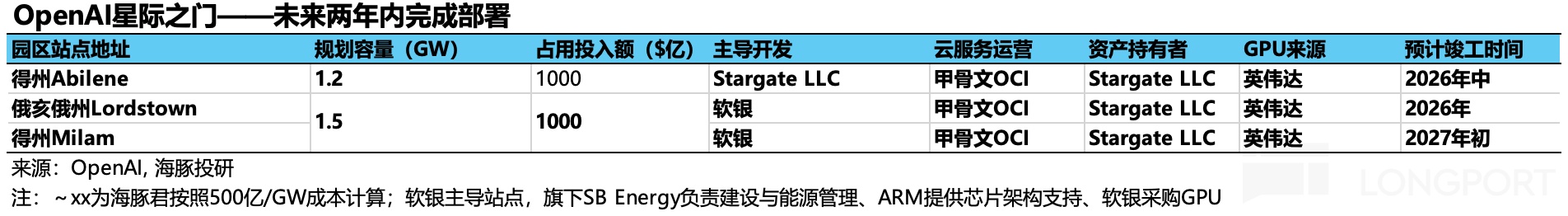

As of now, more than 70% of the deployment target has been planned. The main force is seven data center sites in the United States; overseas, the sites with clear plans are mainly in Norway. Most data center campuses are expected to be completed after 2026. The fastest are the SoftBank-led cluster and the Abilene site, the earliest to receive investment, which can go into operation in about a year with a combined 2.7GW of computing power.

(2) Hooking up with AMD: Plan B for GPUs

In early October, while everyone was still absorbed in the sweet binding between OpenAI and NVIDIA, the cunning OpenAI lined up a backup plan, announcing that it would deploy 6GW of AMD Instinct series GPUs through cloud service providers over the next five years, starting with 1GW of $AMD(AMD.US) Instinct MI450 GPUs. In other words, having a backup keeps it from being squeezed through over-reliance on a single supplier.

In return, AMD will issue OpenAI up to 160 million warrants (equal to 10% of its current equity) in six batches tied to the deployment schedule, with an exercise price of 1 cent, which is basically giving them away for free.

The vesting thresholds are not high either. Only the condition for the first batch has been disclosed (it vests once the first 1GW is deployed and supplied), but logically the later batches should vest with each further 1GW deployed. The exception is the sixth batch, which requires AMD to reach a market value of $1 trillion (equivalent to doubling the current stock price) and may be somewhat difficult. The conditions on the other batches inevitably raise the suspicion that OpenAI can simply convert the warrants into shares and cash out to pay for its AMD chips, without tying up much of its own cash flow.

Of course, the higher AMD's market value rises, the lower OpenAI's cash payment pressure. If OpenAI waits until AMD reaches $1 trillion to convert all the warrants and sell the shares, it will obtain at least $100 billion, against an estimated procurement cost of around $120 billion (at normal high-end GPU power consumption, 6GW corresponds to about 5 million GPUs, at a blended average price of $20,000 to $30,000 each). The ultimate goal of this arrangement is undoubtedly to lift AMD's market value by binding the two companies' interests together.
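
The comparison above can be sketched in a few lines. This is a back-of-the-envelope illustration using only the figures quoted in the article; it ignores dilution, the 1-cent exercise price, and the timing of sales, and the function names are hypothetical.

```python
# Figures quoted in the article; treated here as assumptions.
STAKE = 0.10                        # warrants as a share of AMD's current equity
GPUS_MN = 5                         # GPUs implied by 6GW, in millions
PRICE_RANGE_USD = (20_000, 30_000)  # blended price per GPU, in dollars


def warrant_value_bn(market_cap_bn, stake=STAKE):
    """Rough value of the full warrant package, in $ billion.

    Ignores dilution and the 1-cent exercise price.
    """
    return market_cap_bn * stake


def procurement_cost_bn(gpus_mn=GPUS_MN, price_range=PRICE_RANGE_USD):
    """Low and high estimates of the GPU bill, in $ billion."""
    low, high = price_range
    # millions of GPUs x dollars per GPU = $ million; /1000 converts to $ billion
    return gpus_mn * low / 1000, gpus_mn * high / 1000


value = warrant_value_bn(1000)      # AMD at a $1 trillion market value
low, high = procurement_cost_bn()
print(f"Warrants: ${value:.0f}bn; GPU bill: ${low:.0f}bn to ${high:.0f}bn")
print(f"Coverage: {value / high:.0%} to {value / low:.0%}")
```

Under these inputs the warrants would cover roughly two-thirds to all of the chip bill, which is the point the paragraph above is making.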

(3) Partnering with Broadcom: GPUs also have substitutes

Not satisfied with 'backstabbing' NVIDIA, OpenAI simply found backups to the end, turning to $Broadcom(AVGO.US) to develop custom ASICs with 10GW of computing power, mainly for proprietary inference scenarios. This cooperation plan will start deployment in the second half of next year and is expected to complete computing power construction by the end of 2029.

This ASIC design is handled by OpenAI, while manufacturing, deployment, and network integration are provided by Broadcom. Like AMD, these ASICs will also be deployed in Stargate data centers and partner (such as Microsoft, Oracle) data centers for operation and use.

2. Trillion-dollar Computing Power Investment 'All at Once', Actually OpenAI's Balancing Act?

Combining (1) through (3) above, that is, Stargate plus the direct deals with upstream players in the industry chain, and adding the CoreWeave contract signed in September, OpenAI has planned up to 31GW of new computing power supply for itself (26GW of it added in the past month alone), corresponding to a total deployment value of up to $1.5 trillion across the industry chain.

Among them, NVIDIA's 10GW is purely new, belonging to the leasing contract directly signed between OpenAI and NVIDIA. The total leasing cost is expected to be 10%-15% cheaper than purchasing chips, and the key is that switching from purchasing to leasing can help OpenAI alleviate the cash flow burden of one-time capital investment.

But Dolphin Research believes that overlap between Oracle's 4.5GW and the AMD and Broadcom computing power plans cannot be ruled out. Against the 4.5GW of new demand, Oracle purchased $40 billion worth of NVIDIA GPUs in May, which is only enough for about 1GW of capacity and, according to reports, is earmarked for the Abilene data center in Texas.

In other words, the 4.5GW of new computing power must either be carved out of Oracle's existing data centers or come from newly built ones. Either way, new GPUs have to be procured, whether Oracle keeps buying from NVIDIA or sources them elsewhere.

But OpenAI's cooperation with AMD and Broadcom also indicates that these chips need to be arranged and deployed in in-house data centers (i.e., Stargate) or partner data centers. This partner should mainly refer to Microsoft and Oracle.

It is known that Microsoft is mainly responsible for OpenAI's training computing power, while Oracle handles inference. If the plans do overlap, Broadcom's share should therefore be deployed in Stargate (mostly operated by Oracle) or in Oracle's own data centers. AMD's share may end up with Microsoft, because OpenAI's requirement in the AMD deal is that the MI450, due to launch at the end of 2026, be used for training.

This 30GW load is equivalent to 60% of the total installed data center capacity in the United States for full-year 2024. Running at 70% utilization and converted into tokens (based on the H100 GPU and inference models ranging from 7B to 70B), it can reach about 10 to 378 trillion trillion per year, which is 7 to 200 times OpenAI's 14,000 trillion tokens per year in 2025.

OpenAI's dream is certainly grand: in the future everything becomes AI and computing power demand soars. The key question is why such a huge, round-number computing power demand had to be locked in with agreements and partners within a single month.

Dolphin Research believes the whole process comes down to a game of interests. On the surface OpenAI looks anxious about computing power supply, but this may actually be a balancing act: locking in key partners early to strengthen the entire ecosystem.

(1) Oracle on the Table: Blessing or Curse?

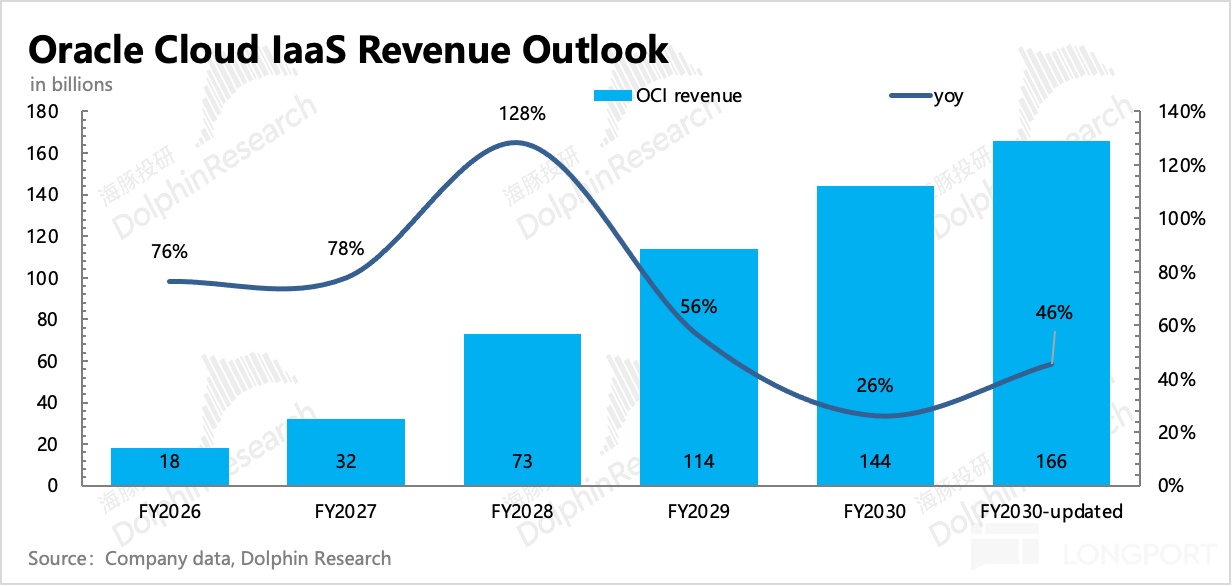

As the party most deeply tied to OpenAI, Oracle is also staking its fortunes on it. The upside is that OpenAI will largely determine its future revenue growth and will try to give it the largest share of computing power orders, such as the $300 billion order starting in 2027. This underpinned the 'explosive' long-term growth guidance at the Q2 earnings call and the 'willful' upward revision at the AI World conference.

But at the same time, as a shareholder of Stargate LLC, Oracle has to keep the project on track. If the early funding falls short (for example, if OpenAI does not raise enough in time), it can only grit its teeth and advance the money. For the two data center campuses whose construction it leads in particular, it may well have to pay out of pocket first for GPU chips and other supporting hardware. Yet Oracle is not flush either: its asset-liability ratio is 80%, and as a data center developer and operator without a cash-cow core business, it has little cash saved up under an asset-heavy model.

In addition, OpenAI has signed a direct lease contract with NVIDIA, which leaves Oracle in an awkward position. And judging from the trend, Stargate may well lease out its idle computing power in the future (30GW is peak demand, not the norm).

Although Oracle holds a small stake in Stargate, that does not stop the two businesses from competing with each other. At that point, Oracle may become Stargate's contractor, maintainer, or distributor, fully locked in to OpenAI, or it may exit the Stargate alliance. Neither is a particularly good outcome.

(2) NVIDIA: Competing with CSP Giants for Industry Discourse Power

NVIDIA's 10GW direct deal with OpenAI is actually offense as defense after Google and Oracle lined up with OpenAI, especially since Google uses its own TPUs. In today's computing power industry chain, a seller's market where supply still falls short of demand, both the midstream and the upstream are enjoying the dividends of industry growth. But the two also compete openly and covertly, especially their respective giants, neither of which yields to the other.

Midstream CSPs want to cut the high cost of procuring GPUs from upstream, while upstream GPU makers are eyeing the midstream's 40% profit pie. If a downstream demand giant binds itself to one side, that side's premium in the industry chain naturally rises. OpenAI, with 800 million weekly active users, is attractive both for its prospects and for the resources behind it, so chip giants are drawn to lock in their interests with it, 'suffocating for dreams together!'.

Or, put differently, the chipmakers have no choice but to believe: the massive orders OpenAI has promised bring the three chipmakers unprecedented incremental revenue. If they do not believe, how can they tell their growth story? Big ship, big pie; their willingness to huddle together is easy to understand.

In addition, this transaction itself is not a loss for NVIDIA. Not only is the $100 billion investment phased in, but even if this $100 billion is counted as a cost, it is still a net profit for NVIDIA, just with a lower gross margin:

According to reports, leasing is 10%-15% cheaper than purchasing, i.e., $35 billion × 85% ≈ $30 billion per GW. After deducting the 'rebate' of $10 billion per GW, the actual net payment is $20 billion per GW. NVIDIA's normal cost is $35 billion × 25% = $8.75 billion, so the adjusted gross profit is $11.25 billion and the adjusted gross margin is 32%, which means earning $15 billion less per GW than before.
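
The per-GW arithmetic can be laid out step by step. The sketch below is a rough illustration, taking the purchase price as $35 billion per GW (the figure that matches the $29.8 billion lease cost used later in the article), a 15% lease discount, a $10 billion per GW investment 'rebate', and a 25% cost ratio; all names are hypothetical.

```python
def lease_economics(list_price_bn=35.0, lease_discount=0.15,
                    rebate_bn=10.0, cost_ratio=0.25):
    """Per-GW economics for the chip supplier, in $ billion (rough sketch)."""
    lease_price = list_price_bn * (1 - lease_discount)  # what the customer is billed
    net_payment = lease_price - rebate_bn               # after the investment 'rebate'
    cost = list_price_bn * cost_ratio                   # supplier's cost per GW
    adjusted_profit = net_payment - cost
    normal_profit = list_price_bn - cost                # profit on an outright sale
    return {
        "lease_price": lease_price,
        "net_payment": net_payment,
        "cost": cost,
        "adjusted_gross_profit": adjusted_profit,
        "adjusted_margin": adjusted_profit / list_price_bn,
        "profit_given_up": normal_profit - adjusted_profit,
    }


result = lease_economics()
for name, amount in result.items():
    if name == "adjusted_margin":
        print(f"{name}: {amount:.1%}")
    else:
        print(f"{name}: ${amount:.2f}bn")
```

Without rounding, the lease price is $29.75 billion, so the adjusted gross profit comes out near $11 billion and the margin near 31%; the article rounds the lease price up to $30 billion, which gives $11.25 billion and 32%.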

In exchange for the gross margin it gives up, NVIDIA locks in up to 10% of OpenAI's equity, which is to some extent a venture investment paid in kind.

Without the leasing model and this $100 billion investment, however, NVIDIA might not have won the 10GW order, and more orders would have flowed to competitors like Broadcom and AMD. In the end, this investment is the equilibrium of a FOMO game among chipmakers.

(3) AMD: So What If It's a Backup? After All, It's on Board

AMD is undoubtedly OpenAI's backup, a fallback OpenAI has kept against NVIDIA, and it will be promoted to a full supplier only under certain conditions (a successful MI450 launch and additional computing power deployment).

Relative to AMD's current revenue scale, the total order amount implies very high elasticity. On one side is an order worth more than $100 billion in revenue over five years; on the other is the dream of a trillion-dollar market value. On the face of it, the cooperation between OpenAI and AMD is a win-win.

But AMD's chips are also deployed in batches, one GW at a time, and each batch still requires tens of billions of dollars of upfront investment. OpenAI may not fully prepay this before deployment is completed, so AMD would have to advance the funds. On the bright side, precisely because AMD is the backup, computing power demand is unlikely to be allocated to it immediately, so even if it has to advance funds, the scale is relatively controllable.

(4) Broadcom: The Fisherman of the Trend

The market sees Broadcom as carrying the highest uncertainty, since its cooperation details are the least disclosed. But Dolphin Research believes that the game of interests between CSPs and GPU giants, such as NVIDIA bypassing CSPs to sign direct supply contracts with downstream customers like OpenAI, effectively cuts CSP giants out of the interest chain and will inevitably accelerate the CSPs' in-house ASIC development. Broadcom, through years of working with Google on the TPU, knows this process well and is undoubtedly a beneficiary. From the current perspective OpenAI is in no hurry to rely on ASIC computing power, but in-house chips are precisely the next step in penetrating CSPs.

III. Trillion-dollar Investment, Where Does the Money Come From?

The 31GW of peak computing power that OpenAI has stockpiled above underpins $1.5 trillion of industry chain value. This is only the 'maximum' expected investment cost many years out, not the short-term outlay or the final cumulative investment, yet the market is still very worried about the payment pressure on OpenAI (whose 2025 revenue may be $15 billion).

In fact, when OpenAI buys Stargate's cloud services, it is effectively a downstream customer of Stargate, paying according to actual usage. As shown below, the party truly responsible for funding is the joint venture, Stargate. Although OpenAI holds 40% of the equity and must bear part of the responsibility, it can always find others to share the burden.

But the market is also worried about whether SoftBank, Oracle, and MGX, the parties jointly responsible alongside OpenAI, can bear the cost, since none of these companies has huge, stable cash flows.

Dolphin Research believes the concern is not unfounded. But the actual payment pressure still needs to be calculated: how much of the gap must be filled by OpenAI's commercialization or refinancing, and whether Oracle and others will even have to advance funds for a long period.

OpenAI's overall planning cycle is basically five years, and everything is paid in installments: the NVIDIA deal is a five-year lease (a direct lease that bypasses CSPs), AMD and Broadcom are paid according to the actual deployment schedule, AMD also comes with warrants that can be converted and cashed out, the Oracle deal is also a five-year lease, and CoreWeave is a multi-year lease as well.

Even so, taken together, the short-term cash payment pressure is still considerable.

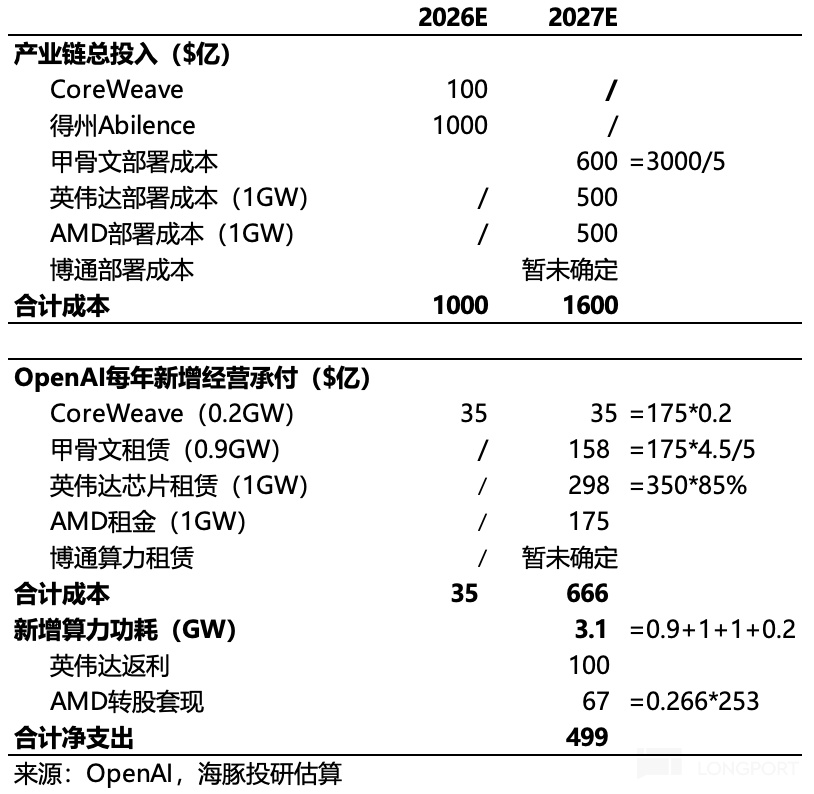

(1) Data Center Investment: Especially according to the current plan, the three data centers in Stargate (Texas Abilene, Ohio Lordstown, Texas Milam) will all be completed and deployed within the next two years. This also means that the payment cycle is coming soon, which is the common pressure faced by the project parties behind Stargate (OpenAI, Oracle, SoftBank, MGX).

(2) In addition, NVIDIA's first 1GW and AMD's first 1GW will also be completed within one year, corresponding to deployment costs of $35 billion × 85% (lease discount) = $29.8 billion and $50 billion (including $17.5 billion in chip costs), respectively.

(3) Oracle's $300 billion 4.5GW computing power order will start paying $60 billion in 2027.

Adding up (1) through (3), Stargate, with OpenAI as its representative, will need to pay $160 billion by 2027. The actual cost falling on OpenAI is the cost of leasing computing power on demand plus its allocated share of the upfront investment, totaling more than $60 billion.

Against this, NVIDIA's $10 billion investment, plus more than $6 billion from cashing out the first batch of AMD warrants (about 26.6 million shares, valued at the current stock price; in an actual rate-cut cycle, valuations and the cash-out amount would rise together), offsets part of the bill, leaving a final net expenditure of about $50 billion. Note that this only counts the deals with new partners; the original cloud service procurement from Microsoft also continues. (Because the actual situation is uncertain and the specifics are unclear, the estimate below is relatively rough and for general reference only.)

Currently, Stargate's initial funding is $100 billion. OpenAI and SoftBank lead, each investing $19 billion in this first phase; Oracle and MGX jointly invest $7 billion; and the remaining $55 billion is planned to be raised through bond issuance.
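
A short tally shows how the first-phase funding stacks up and how much of it is debt. The figures are the ones quoted above; the dictionary keys and function name are illustrative only.

```python
# First-phase Stargate funding as quoted in the article, in $ billion.
FUNDING_BN = {
    "OpenAI": 19,
    "SoftBank": 19,
    "Oracle + MGX": 7,
    "Bond issuance": 55,
}


def funding_mix(stack, debt_key="Bond issuance"):
    """Return (total funding in $ billion, share of it financed by debt)."""
    total = sum(stack.values())
    return total, stack[debt_key] / total


total, debt_share = funding_mix(FUNDING_BN)
print(f"Total: ${total}bn, debt-financed: {debt_share:.0%}, "
      f"shareholder cash: {1 - debt_share:.0%}")
```

On these numbers, more than half of the first phase depends on the bond market rather than on shareholder cash.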

The key question underpinning all of this is whether OpenAI will have the commercialization capability to honor these massive, trillion-dollar orders.

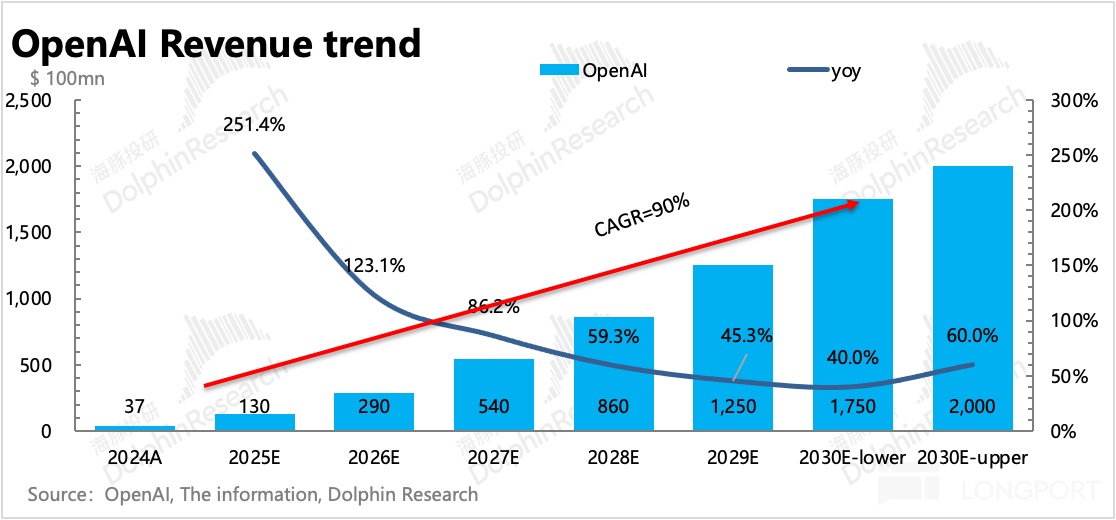

In the face of doubts about its ability to pay, OpenAI's commercialization outlook is as follows: 2025 revenue is expected to be $13 billion (implying second-half revenue of $8.7 billion, up 100% from the first half), the 2029 target is $125 billion, and the 2030 target is $174-200 billion, with a CAGR of over 90% in the next five years.
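
For readers who want to test these targets themselves, compound annual growth can be computed with the standard formula, (end / start)^(1 / years) - 1. The sketch below applies it to the revenue figures quoted above; the choice of 2025 as the base year is an assumption made here for illustration.

```python
def cagr(start, end, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


BASE_2025_BN = 13  # expected 2025 revenue, in $ billion

# (label, target revenue in $ billion, years from 2025)
targets = [
    ("2029 target", 125, 4),
    ("2030 target, low end", 174, 5),
    ("2030 target, high end", 200, 5),
]

for label, revenue_bn, years in targets:
    rate = cagr(BASE_2025_BN, revenue_bn, years)
    print(f"{label}: ${revenue_bn}bn implies {rate:.0%} a year")
```

The result is sensitive to the base year: measured from $13 billion in 2025, the 2030 targets imply roughly 68% to 73% a year, so the 90% figure presumably rests on a different base year or period that the article does not spell out.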

Under the above 2027 expenditure estimate, the expected revenue of $54 billion just covers the cost of new computing power, but there are also R&D personnel, marketing investment, and basic company operation expenses to cover, which account for about one-third of the revenue, or $15-20 billion.
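
The size of that shortfall follows from simple subtraction. In the sketch below, the $50 billion net compute cost is the rough estimate from earlier in the article, the operating expense range is the $15-20 billion quoted above, and the function name is hypothetical.

```python
def funding_gap_bn(revenue_bn, compute_cost_bn, opex_bn):
    """Shortfall in $ billion; a positive result must be met by refinancing."""
    return compute_cost_bn + opex_bn - revenue_bn


REVENUE_2027_BN = 54    # expected 2027 revenue quoted in the article
NET_COMPUTE_BN = 50     # article's rough net compute expenditure

for opex_bn in (15, 20):
    gap = funding_gap_bn(REVENUE_2027_BN, NET_COMPUTE_BN, opex_bn)
    print(f"Opex ${opex_bn}bn -> gap of ${gap}bn")
```

Under these assumptions the gap is on the order of $11 billion to $16 billion, before counting the continuing cloud spend with Microsoft.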

This gap can only be filled by refinancing. But the question is, can OpenAI's grand vision of $200 billion in revenue within five years be realized?

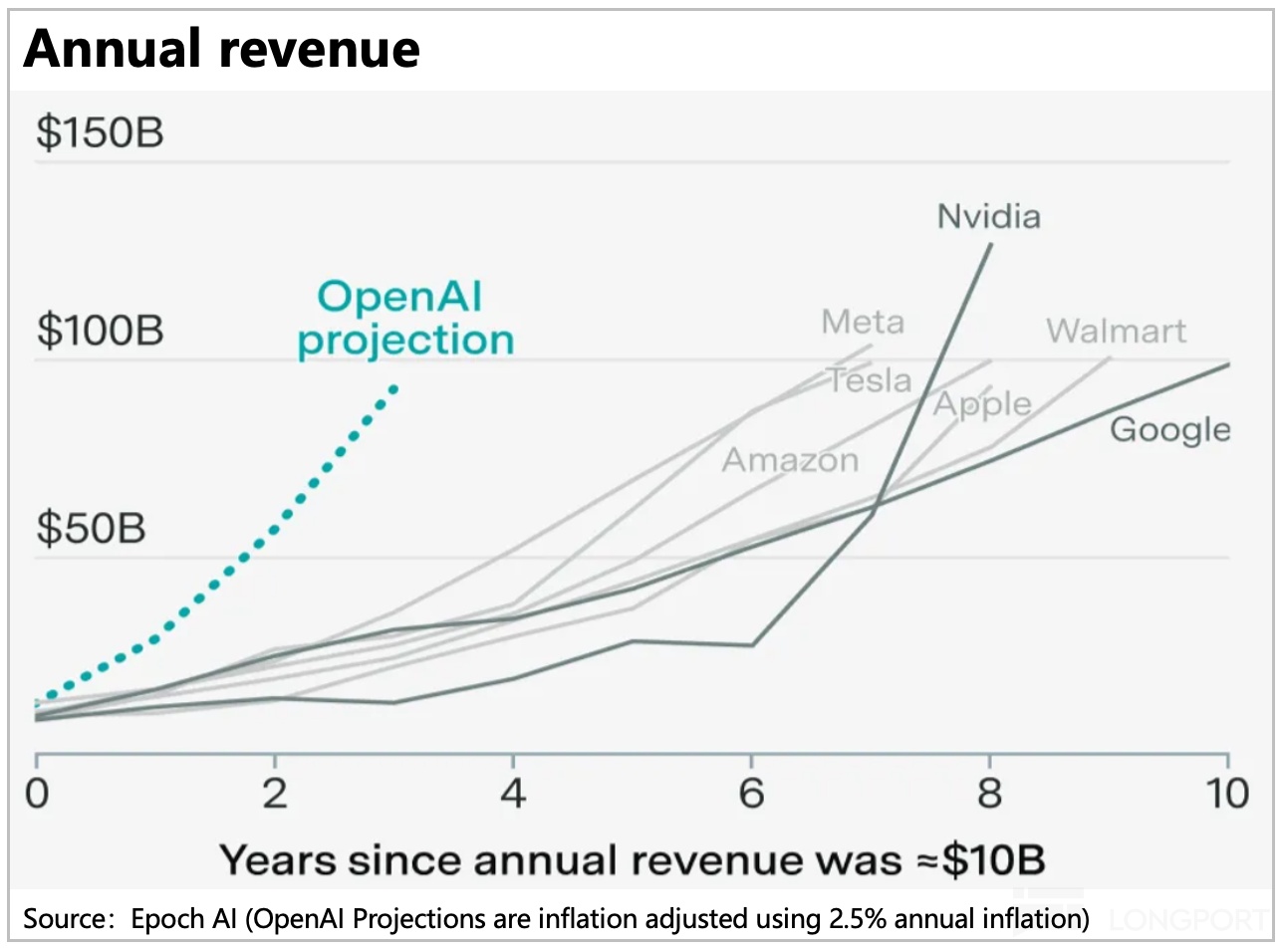

Tenfold revenue growth in five years, starting from a base of more than ten billion dollars, is extremely challenging; it is like going from zero to $100 billion in revenue in five years. Over the past few decades, only NVIDIA, which holds over 90% absolute dominance in AI GPUs and sits in chip design, the highest-value segment of the industry chain, has shown such a 'growth slope'.

However, ChatGPT itself emerged out of nowhere, went from initial skepticism to eventual conviction, and became synonymous with AI chatbots. Can OpenAI replicate the ChatGPT myth? In the next article, Dolphin Research will dig into OpenAI's revenue side: its commercialization prospects and the potential impact on existing giants. Stay tuned!

<End Here>
